为 Apache Iceberg™ 表使用目录链接的数据库¶

通过目录链接的数据库,您可以在 Snowflake 中访问多个远程 Iceberg 表,而无需创建单独的 外部管理表。

目录链接的数据库是一种连接到外部 Iceberg REST 目录的 Snowflake 数据库。Snowflake 会自动与外部目录同步以检测命名空间和 Iceberg 表,并将远程表注册到目录链接的数据库。目录链接的数据库还支持创建和删除架构或 Iceberg 表。

Billing for catalog-linked databases¶

Snowflake bills your account for the following usage:

Automatic table discovery, create schema, drop schema, and drop table. Snowflake will bill your account for this usage under the CREDITS_USED_CLOUD_SERVICES usage type. Usage for cloud services is charged only if the daily consumption of cloud services exceeds 10% of the daily usage of virtual warehouses. For more information, see 了解云服务使用计费.

Create table. Snowflake will bill your account for this usage under the CREDITS_USED_COMPUTE usage type through auto refresh. The cost for this usage is described in Table 5 of the Snowflake service consumption table on the Snowflake website. Refer to the Snowflake-managed compute column for the Automated Refresh and Data Registration row.

Snowflake won't bill you for any cloud services that you use during table creation.

备注

To view the credit usage for your catalog-linked databases, use the CATALOG_LINKED_DATABASE_USAGE_HISTORY 视图.

Workflow to configure access to your external catalog and table storage¶

以下步骤介绍如何创建目录链接的数据库、检查 Snowflake 与目录之间的同步状态,以及在数据库中创建或查询表。

Configure access to your external catalog and table storage¶

Before you create a catalog-linked database, you need to configure access to your external catalog and table storage. To configure this access, you configure a catalog integration with vended credentials. With this option, your remote Iceberg catalog must support credential vending.

有关说明,请参阅 为 Apache Iceberg™ 表使用由目录分发的凭据。

备注

If your remote Iceberg catalog doesn't support credential vending, you must configure an external volume and a catalog integration to configure access to your external catalog and table storage. First, configure an external volume for your cloud storage provider. Then, configure a Apache Iceberg™ REST catalog integration for your remote Iceberg catalog.

创建目录链接数据库¶

使用 CREATE DATABASE(目录链接) 命令创建目录链接的数据库:

The following example creates a catalog-linked database that uses vended credentials. The sync interval is 30 seconds, which is the default. The sync interval tells Snowflake how often to poll your remote catalog.

CREATE DATABASE my_linked_db

LINKED_CATALOG = (

CATALOG = 'my_catalog_int'

);

备注

To create a catalog-linked database that uses an external volume, see CREATE DATABASE(目录链接), including the example.

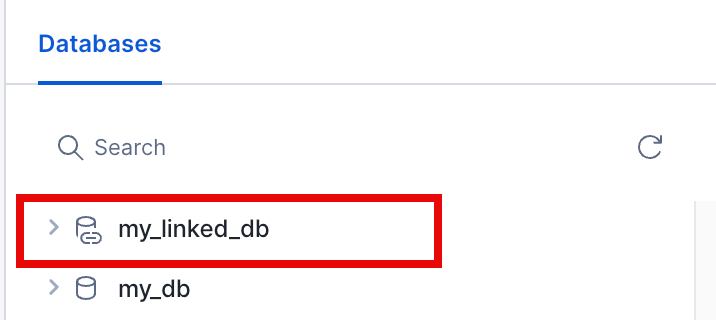

Your catalog-linked database includes a link icon.

检查目录同步状态¶

要检查 Snowflake 是否已成功将远程目录链接至数据库,请使用 SYSTEM$CATALOG_LINK_STATUS 函数。

该函数还可提供相关信息,帮助您识别远程目录中同步失败的表。

SELECT SYSTEM$CATALOG_LINK_STATUS('my_linked_db');

Identify tables that were created but couldn't be initialized¶

To identify tables in the remote catalog that synced successfully but fail to refresh automatically, run the SHOW ICEBERG TABLES

command, and then refer to the auto_refresh_status column in the output. These tables

have an executionState of ICEBERG_TABLE_NOT_INITIALIZED in the output.

For example, Snowflake might successfully discover and create a table in your remote catalog to your catalog-linked database, but this table has a corrupted data file in your remote catalog. As a result, Snowflake can't automatically refresh the table until you resolve the error.

Automated refresh is turned off for these kinds of tables, so querying the table in Snowflake returns an error that says the table was never initialized. To query the table, you must fix the error, and then turn on automated refresh for the table.

Query a table in your catalog-linked database¶

创建目录链接的数据库后,Snowflake 会启动表发现过程,并自动使用 SYNC_INTERVAL_SECONDS 参数值(默认间隔为 30 秒)轮询已链接的目录以检查变更。

在数据库中,远程目录的允许命名空间将显示为架构,而 Iceberg 表将出现在其各自的架构之下。

您可以使用 SELECT 语句查询远程表。

备注

For the requirements for identifying objects in a catalog-linked database, see Requirements for identifier resolution in a catalog-linked database.

有关对象标识符的更多信息,请参阅 标识符要求。

例如:

USE DATABASE my_linked_db;

SELECT * FROM my_namespace.my_iceberg_table

LIMIT 20;

写入远程目录¶

您可以使用 Snowflake 在已链接目录中创建命名空间和 Iceberg 表。有关详细信息,请参阅以下主题:

Requirements for identifier resolution in a catalog-linked database¶

The requirement for resolving an identifier depends on the following:

The value that you specified for the CATALOG_CASE_SENSITIVITY parameter when you created your catalog-linked database

Whether your external Iceberg catalog uses case-sensitive or case-insensitive identifiers.

备注

These requirements apply to identifying existing schemas, tables, and table columns. They also include some special cases for creating or altering an object.

When you create a new schema, table, or column in a case-sensitive catalog such as AWS Glue or Unity Catalog, you must use lowercase letters and surround the schema, table, and column names in double quotes. This is also required for other Iceberg REST catalogs that only support lowercase identifiers.

The following table shows the requirement for each scenario:

CATALOG_CASE_SENSITIVITY value |

External Iceberg catalog uses |

Requirement |

|---|---|---|

CASE_SENSITIVE |

Case sensitive identifiers |

Snowflake matches identifiers exactly as they appear, including case. Snowflake automatically converts unquoted identifiers to uppercase, but quoted identifiers must match exactly the case in your external catalog. The following example shows a valid query for creating a table: CREATE TABLE "Table1" (id INT, name STRING);

Snowflake creates the table in the external catalog as The following example shows a valid query for selecting the SELECT * FROM "Table1";

In the previous example, the double quotes are required for matching the capitalization exactly. The following example shows an invalid query, unless a SELECT * FROM table1;

In the previous example, the query is invalid if The following example shows an invalid query for the case when an all uppercase SELECT * FROM TABLE1;

|

CASE_SENSITIVE |

Case insensitive identifiers |

If the external Iceberg catalog is actually case insensitive, and normalizes to lowercase, you must surround identifiers in double quotes. The following example shows valid queries: SELECT * from "s1";

SELECT * from "lowercasetablename";

|

CASE_INSENSITIVE |

Case insensitive identifiers |

|

CASE_INSENSITIVE |

Case sensitive identifiers |

If the external Iceberg catalog is actually case sensitive, Snowflake treats unquoted identifiers as case-insensitive and automatically converts unquoted identifiers to uppercase. When you create or query objects, Snowflake matches identifiers regardless of case, as long as they are unquoted. Using this pattern is discouraged because Snowflake can't resolve two different identifiers that differ in casing. This pattern only works when no two identifiers are different in casing only. Consider the case where the remote catalog has a SELECT * from table1;

SELECT * from TABLE1;

SELECT * from Table1;

SELECT * from "Table1";

Quoted identifiers preserve case and match exactly. However, in CASE_INSENSITIVE mode, unquoted and quoted forms are both supported. |

Considerations for using a catalog-linked database for Iceberg tables¶

Consider the following items when you use a catalog-linked database:

仅当您使用 Iceberg REST 的目录集成(例如 Snowflake Open Catalog)时才支持。

要将自动表发现限制为一组特定的命名空间,请使用 ALLOWED_NAMESPACES 参数。您也可以使用 BLOCKED_NAMESPACES 参数来屏蔽一组命名空间。

Snowflake doesn't sync remote catalog access control for users or roles.

您可以在目录链接的数据库中创建架构或外部管理的 Iceberg 表。目前不支持创建其他 Snowflake 对象。

You can't create database roles in a catalog-linked database.

延迟:

对于链接到远程目录中 7,500 个命名空间的数据库,发现命名空间和表大约需要一小时。

对于包含 500,000 个表的远程目录,自动刷新过程大约需要一小时才能完成。对于具有不同延迟要求的命名空间,我们建议您创建单独的目录链接的数据库。每个数据库都应引用具有相应自动刷新间隔 (REFRESH_INTERVAL_SECONDS) 的目录集成。

对于目录链接的数据库中的 Iceberg 表:

Snowflake 不复制远程目录表属性(例如保留策略或缓冲区),并且目前不支持更改表属性。

默认情况下,自动刷新 处于启用状态。如果外部表的

table-uuid和目录链接的数据库表不匹配,则刷新失败,Snowflake 将该表从目录链接的数据库中删除;Snowflake 不会更改远程表。如果您从远程目录中删除表,Snowflake 会将该表从目录链接的数据库中删除。此操作是异步进行的,因此您可能不会立即在远程目录中看到更改。

如果您重命名远程目录中的表,Snowflake 会从目录链接的数据库中删除现有表,并使用新名称创建一个表。

Masking policies and tags are supported. Other Snowflake-specific features, including replication and cloning, aren't supported.

The character that you choose for the NAMESPACE_FLATTEN_DELIMITER parameter can't appear in your remote namespaces. During the auto discovery process, Snowflake skips any namespace that contains the delimiter, and doesn't create a corresponding schema in your catalog-linked database.

If you specify anything other than

_,$, or numbers for the NAMESPACE_FLATTEN_DELIMITER parameter, you must put the schema name in quotes when you query the table.对于链接到 AWS Glue 的数据库,必须使用小写字母,并将架构、表和列名称用双引号引起来。对于其他仅支持小写标识符的 Iceberg REST 目录,这也是必需的。

以下示例显示了有效查询:

CREATE SCHEMA "s1";

以下语句无效,因为它们使用大写字母或省略了双引号:

CREATE SCHEMA s1; CREATE SCHEMA "Schema1";

不支持使用 UNDROP ICEBERG TABLE。

Sharing:

Sharing with a listing isn't currently supported

Direct sharing is supported

写入目录链接的数据库中的表:

Creating tables in nested namespaces isn't currently supported.

目前不支持写入嵌套命名空间中的表。

Position row-level deletes (https://iceberg.apache.org/spec/#row-level-deletes) are supported for tables stored on Amazon S3, Azure, or Google Cloud. Row-level deletes with equality delete files aren’t supported. For more information about row-level deletes, see 使用行级删除. To turn off position deletes, which enable running the Data Manipulation Language (DML) operations in copy-on-write mode, set the

ENABLE_ICEBERG_MERGE_ON_READparameter to FALSE at the table, schema, or database level.