SnowConvert AI - Best practices

1. Extraction

We highly recommend you use our scripts to extract your workload:

Teradata: Click here (https://github.com/Snowflake-Labs/SC.DDLExportScripts/blob/main/Teradata/README.md).

Oracle: Click here (https://github.com/Snowflake-Labs/SC.DDLExportScripts/blob/main/Oracle/README.md).

SQLServer: Click here (https://github.com/Snowflake-Labs/SC.DDLExportScripts/blob/main/SQLServer/README.pdf).

Redshift: Click here.

2. Preprocess

We highly recommend running a preprocess script before starting an assessment or a conversion; it is intended to give you better results. The script performs the following tasks:

Create a single file for each top-level object

Organize each file by a defined folder hierarchy (The default is: Database Name -> Schema Name -> Object Type)

Generate an inventory report that provides information on all the objects that are in the workload.

2.1 Download

Please click here (https://sctoolsartifacts.z5.web.core.windows.net/tools/extractorscope/standardize_sql_files) to download the binary of the script for macOS (make sure to follow the setup steps in section 2.3).

Please click here (https://sctoolsartifacts.z5.web.core.windows.net/tools/extractorscope/standardize_sql_files.exe) to download the binary of the script for Windows.

2.2 Description

The following information is needed to run the script:

| Script Argument | Example Value | Required | Usage |
|---|---|---|---|
| Input folder | | Yes | |
| Output folder | | Yes | |
| Database name | | Yes | |
| Database engine | | Yes | |
| Output folder structure | | No | |
| Pivot tables generation | | No | |

Note

The supported values for the database engine argument (-e) are: oracle, mssql, and teradata.
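Because only three engine values are accepted, it can be useful to check the value before launching a long run. The following is a minimal sketch, not part of the tool: `is_supported_engine` and the `ENGINE` variable are hypothetical names, and the check assumes the values must be written in lowercase exactly as listed in the note above.

```shell
# Sketch: validate the -e value before invoking the script.
# is_supported_engine is a hypothetical helper, not part of standardize_sql_files.
# Assumption: values are matched exactly as listed in the note (lowercase).
is_supported_engine() {
  case "$1" in
    oracle|mssql|teradata) return 0 ;;
    *) return 1 ;;
  esac
}

ENGINE="teradata"
if is_supported_engine "$ENGINE"; then
  echo "engine ok: $ENGINE"
else
  echo "unsupported engine: $ENGINE" >&2
fi
```

A wrapper script could call this helper first and only pass `-e "$ENGINE"` to the binary when the check succeeds.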

Note

The supported values for the output folder structure argument (-s) are: database_name, schema_name and top_level_object_name_type.

When specifying this argument, all of the values above must be separated by commas (e.g., -s database_name,top_level_object_name_type,schema_name).

This argument is optional. When it is not specified, the default structure is the following: database name, top-level object type, and schema name.
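As an illustration of the -s argument, the sketch below assembles a command line with an explicit folder order and prints it for review before anything is executed. The paths, the `Workload1` database name, and the variable names are placeholders chosen for this example; only the flags and the three structure values come from the notes above.

```shell
# Hypothetical paths; adjust them to your workload.
INPUT_DIR="./extracted_ddl"
OUTPUT_DIR="./standardized"

# Explicit folder order: database -> schema -> object type.
# The three values are comma-separated, with no spaces.
STRUCTURE="database_name,schema_name,top_level_object_name_type"

# Dry run: build and print the command so the arguments can be reviewed.
CMD="./standardize_sql_files -i \"$INPUT_DIR\" -o \"$OUTPUT_DIR\" -d Workload1 -e oracle -s $STRUCTURE"
echo "$CMD"

# To execute for real, run the same command directly:
# ./standardize_sql_files -i "$INPUT_DIR" -o "$OUTPUT_DIR" -d Workload1 -e oracle -s "$STRUCTURE"
```

Passing -s explicitly makes the resulting folder layout independent of the default order.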

Note

The pivot tables generation parameter (-p) is optional.

2.3 Set up the binary for Mac

Set the binary as an executable:

chmod +x standardize_sql_files

Run the script by executing the following command:

./standardize_sql_files

If this is the first time running the binary, the following message will pop up:

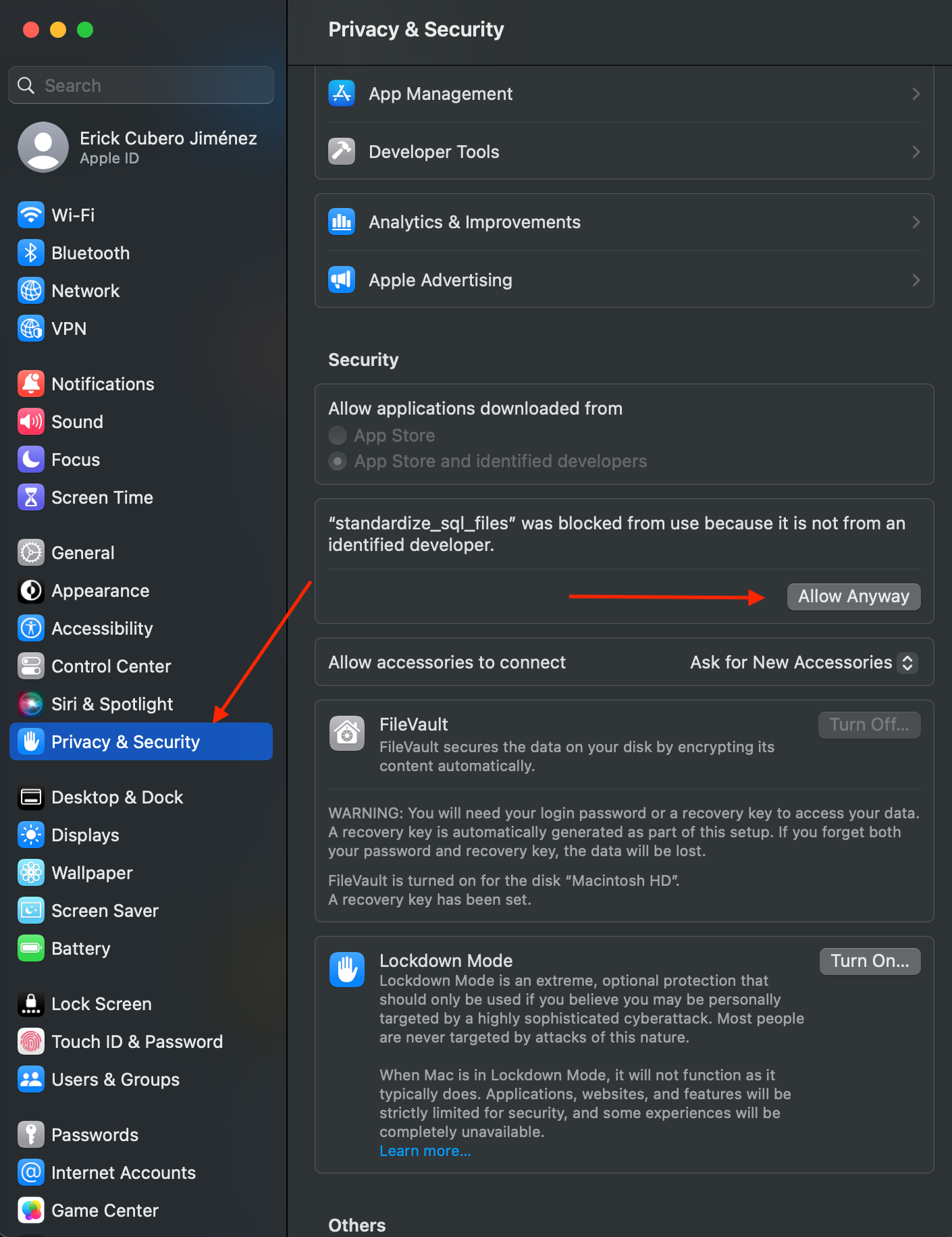

Click OK.

Open Settings -> Privacy & Security and click Allow Anyway.

Running the script

Run the script using the following format:

Mac format

./standardize_sql_files -i "input path" -o "output path" -d Workload1 -e teradata

Windows format

./standardize_sql_files.exe -i "input path" -o "output path" -d Workload1 -e teradata

If the script executes successfully, the following output is displayed:

Splitting process completed successfully!

Report successfully created!

Script successfully executed!
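When the script runs unattended, the success messages above can be checked automatically. The sketch below is one possible approach under stated assumptions: `check_success` is a hypothetical helper, the messages are assumed to be written to standard output, and the sample text stands in for a real captured log. The script's exit code behavior is not documented here, so the check relies only on the final message.

```shell
# check_success is a hypothetical helper: it reads captured output on stdin
# and succeeds only if the final success message is present.
check_success() {
  grep -qF 'Script successfully executed!'
}

# Sample output copied from the messages above. In practice it could be
# captured with something like (assuming the messages go to stdout):
#   ./standardize_sql_files -i "input path" -o "output path" -d Workload1 -e teradata > run.log
SAMPLE_OUTPUT='Splitting process completed successfully!
Report successfully created!
Script successfully executed!'

if printf '%s\n' "$SAMPLE_OUTPUT" | check_success; then
  echo "run completed"
else
  echo "run did not report success" >&2
fi
```

With a real log file, the same helper can be used as `check_success < run.log`.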