SnowConvert AI - ETL Migration¶

SnowConvert AI provides powerful ETL migration capabilities to help you modernize legacy ETL workflows and migrate them to cloud-native architectures on Snowflake. The Replatform feature converts traditional ETL packages into modern data transformation frameworks like dbt (data build tool), while preserving orchestration logic using Snowflake’s native TASKs and stored procedures.

This guide focuses on migrating SSIS (SQL Server Integration Services) (https://learn.microsoft.com/en-us/sql/integration-services/sql-server-integration-services?view=sql-server-ver17) packages to dbt projects on Snowflake. You’ll learn about the migration process, understand the generated output structure, and discover how to work with the migrated code.

SSIS Replatform¶

The SSIS Replatform feature migrates your SQL Server Integration Services packages to a modern, cloud-native architecture on Snowflake. SSIS packages are decomposed into two primary components:

Data Flow Tasks → Converted to dbt projects for data transformation

Control Flow logic → Converted to Snowflake TASKs or stored procedures for orchestration

This section describes the step-by-step process to migrate your SSIS projects to dbt projects on Snowflake using SnowConvert AI.

Prerequisites¶

Before you begin, ensure you have the following:

SnowConvert AI is installed with a valid license (access code)

Source dependencies accessible in Snowflake (required for running the migrated dbt project, not for the migration itself)

DTSX package files extracted from ISPAC files (ISPAC files aren’t supported directly)

SSIS package version 8 or later (for earlier versions, upgrade your packages (https://learn.microsoft.com/en-us/sql/integration-services/install-windows/upgrade-integration-services-packages-using-the-ssis-package-upgrade-wizard?view=sql-server-ver17) first)

Migration Steps¶

Follow these steps to migrate your SSIS project:

Step 1: Select the path to your scripts¶

Include DDL scripts for all dependencies to ensure high-quality migrated code. The migration process uses these scripts to identify data types and constraints.

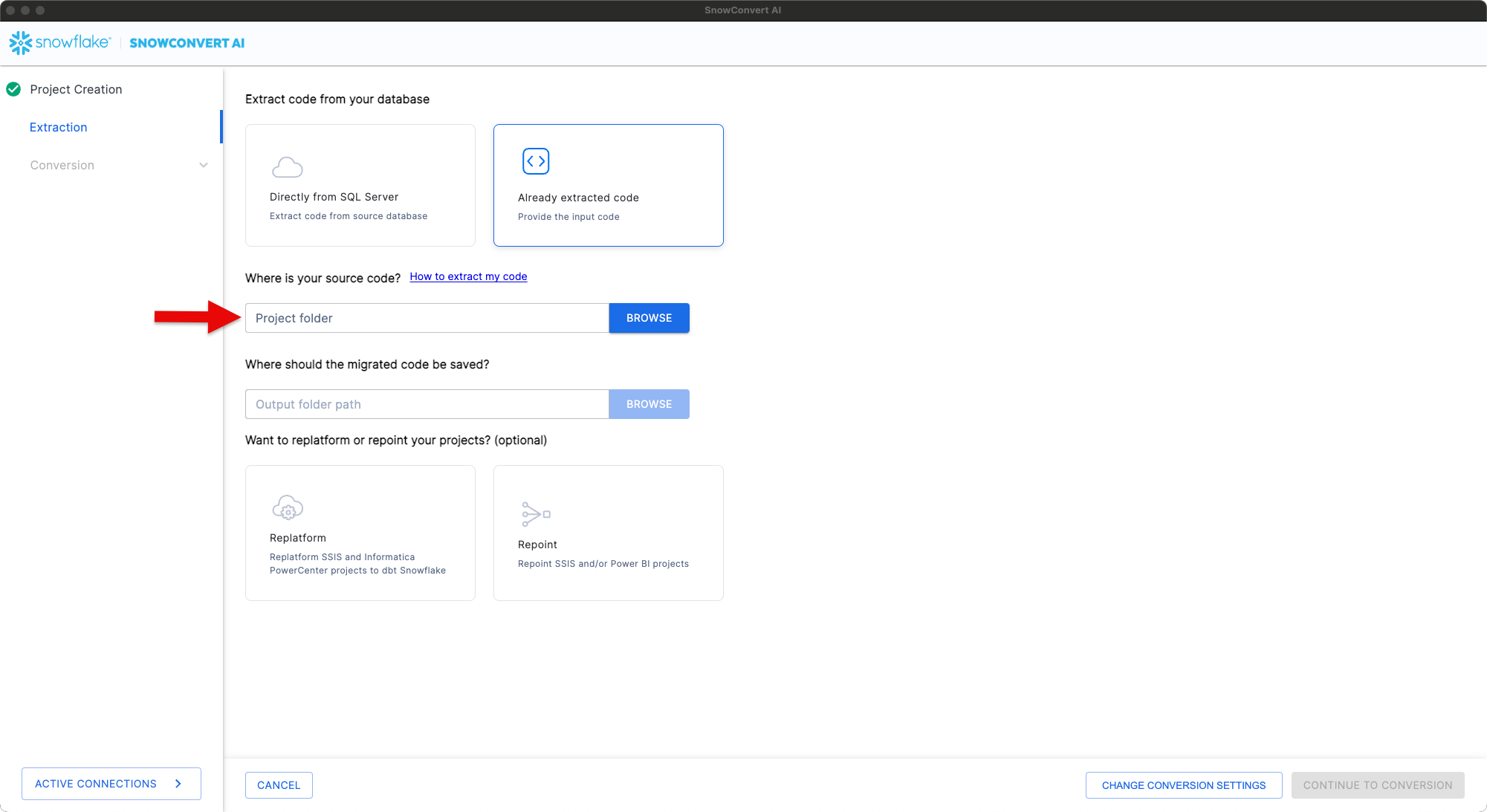

Select the path to your scripts in SnowConvert AI¶

Step 2: Click the Replatform card¶

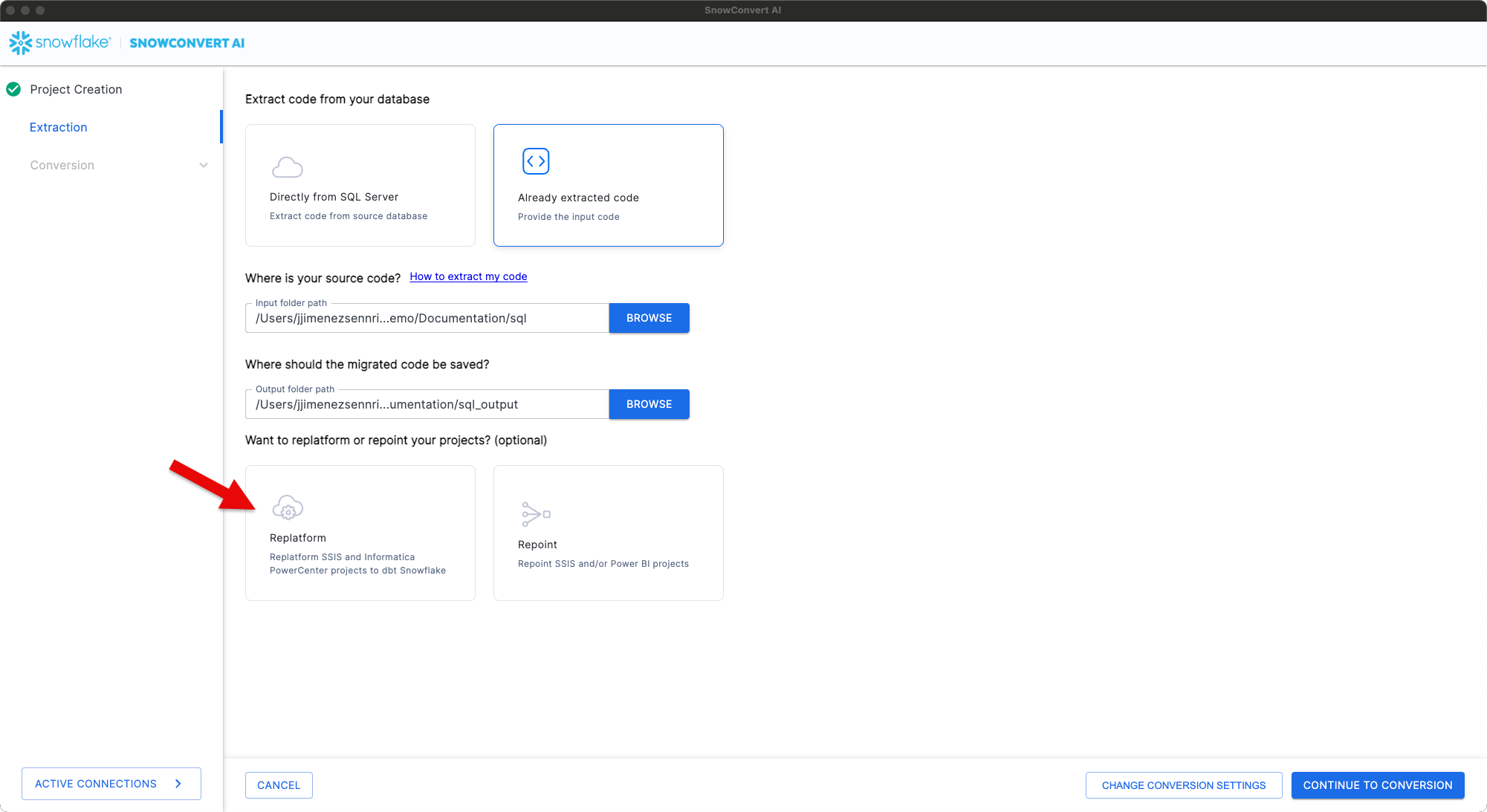

Click the Replatform card to begin the migration. If you don’t see this option, update SnowConvert AI to the latest version, or contact support.

Click the Replatform card¶

Step 3: Browse to your SSIS project location¶

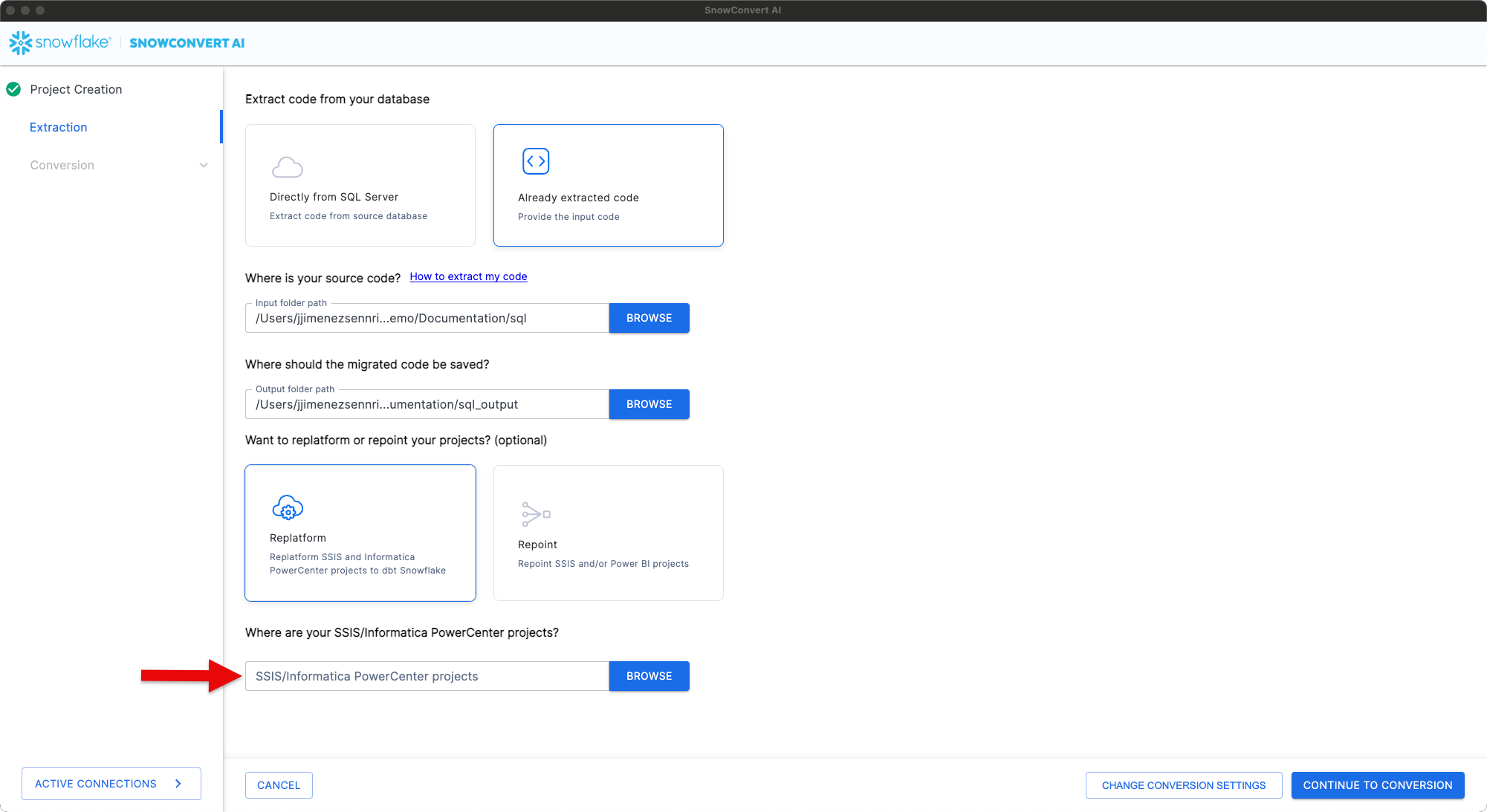

Click Browse and navigate to your SSIS project folder

Ensure the folder contains DTSX files (required for migration)

Click Continue To Conversion

Browse to your SSIS project location and click Continue To Conversion¶

SnowConvert AI migrates your SSIS project and any scripts in the specified paths.

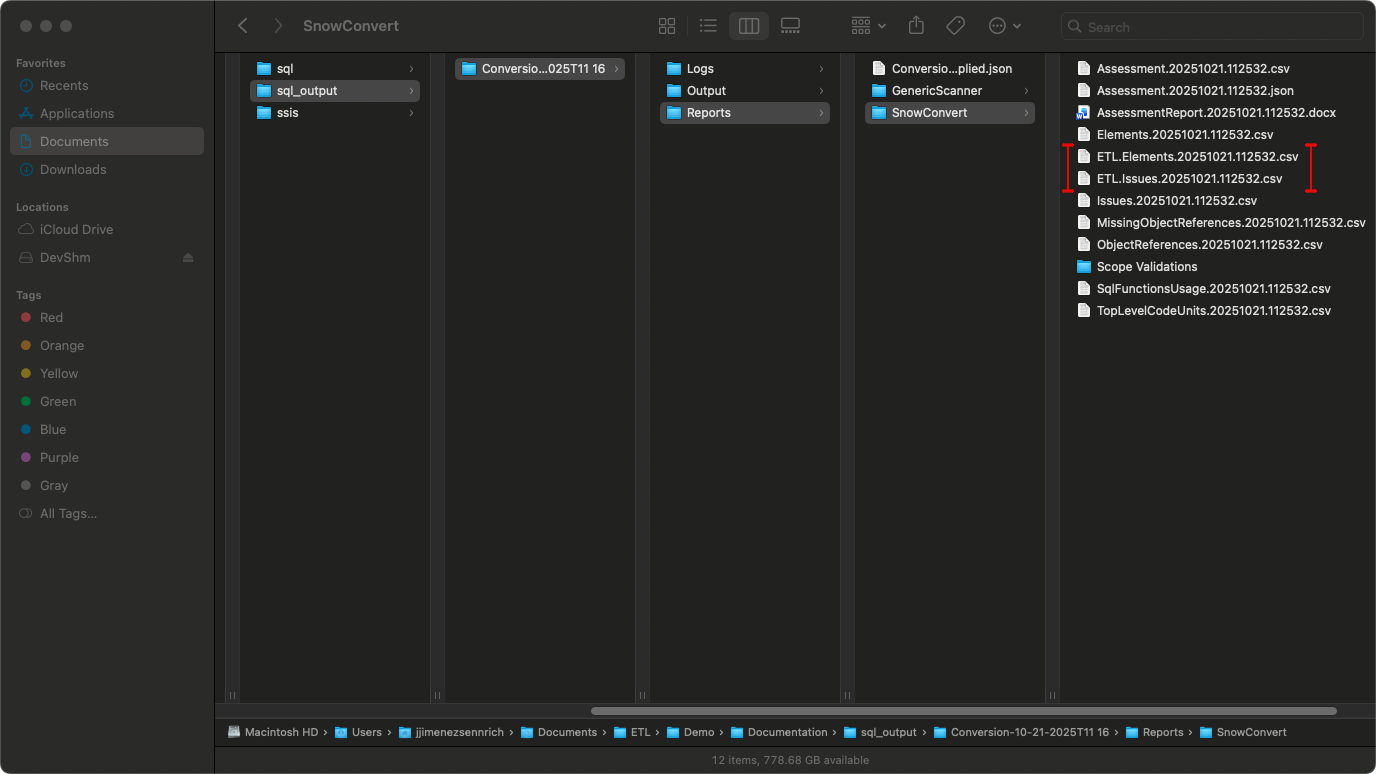

Step 4: Review the results¶

After migration completes:

Review the migration reports

Fix any issues identified in the reports

Fill placeholders in

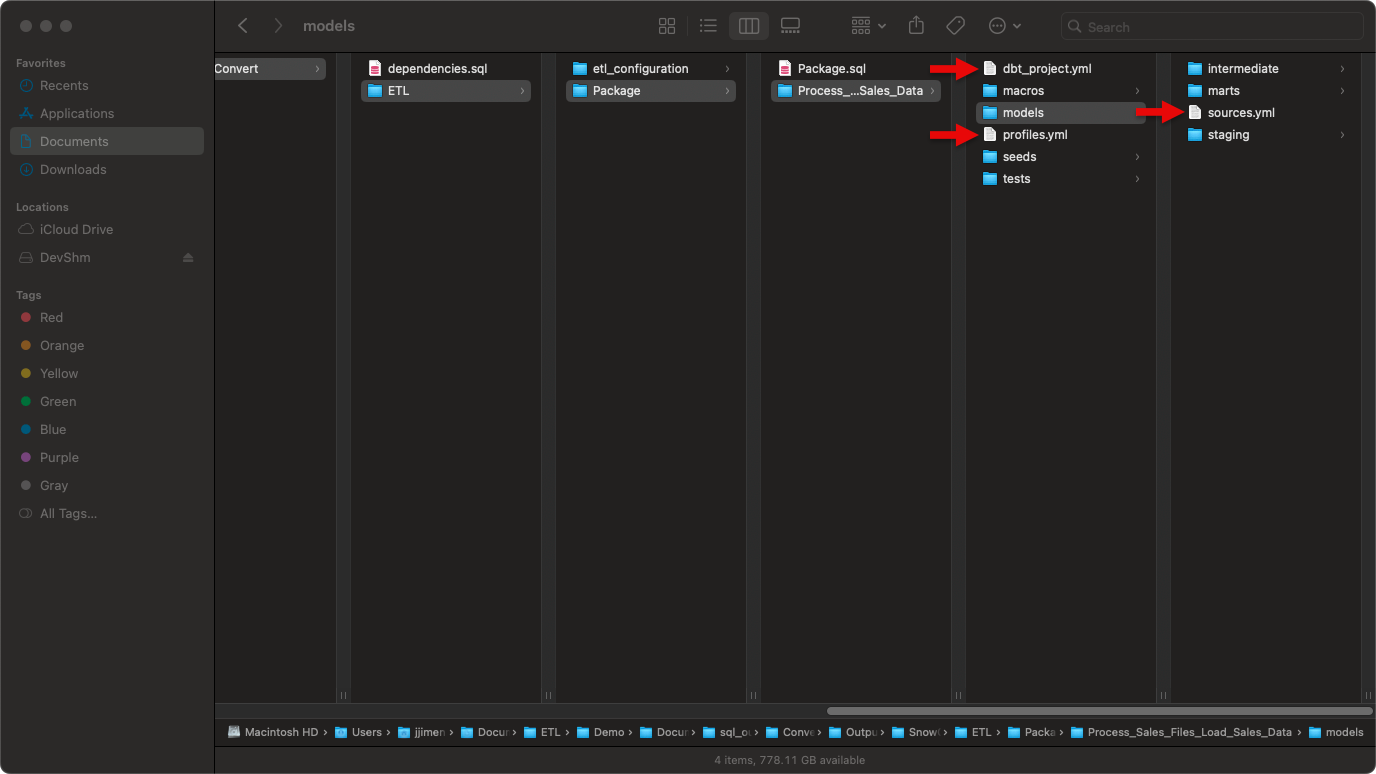

sources.yml,profiles.yml, anddbt_project.yml

Review the migration reports to identify any issues¶

Fill placeholders in sources.yml, profiles.yml, and dbt_project.yml¶

The output includes:

ETL/: Main folder containing all converted SSIS packages

etl_configuration/: Infrastructure components (control_variables table, UDFs, procedures)

{PackageName}/: Folder for each SSIS package containing:

{PackageName}.sql: Orchestration file (TASK or PROCEDURE)

{DataFlowTaskName}/: dbt project for each Data Flow Task

script.sql: Migrated SQL scripts (if applicable)

For a detailed description of the output structure, see Output Structure.

Step 5: Upload your dbt project¶

After you’ve reviewed the dbt project, filled placeholders in .yml files, and fixed EWIs, upload the project using one of these methods.

For more information about working with dbt projects on Snowflake, see Getting Started with dbt Projects.

Option A: Upload using Snowflake CLI¶

Run this command in your dbt project directory (replace values in italics with your schema, database, and package names):

snow dbt deploy --schema schema_name --database database_name --force package_name

If successful, skip to Step 6.

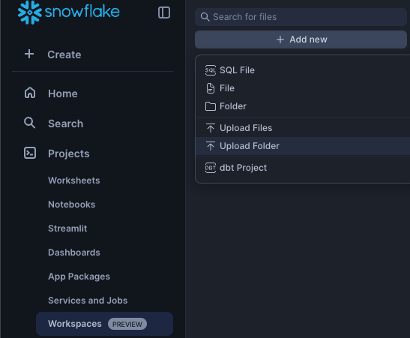

Option B: Upload via Snowflake Workspace¶

Navigate to Workspaces > Add new > Upload Folder and select your dbt project folder.

Navigate to Workspaces > Add new > Upload Folder¶

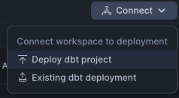

Deploy the dbt project to make it accessible for orchestration:

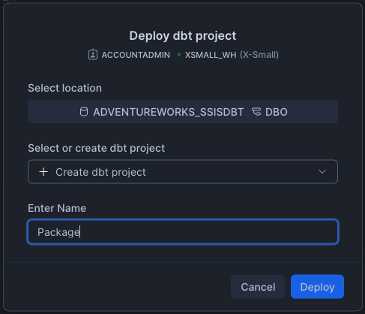

Click Connect > Deploy dbt project (top right corner)

Use the project name that matches your dbt project folder name

Example: For

Process_Sales_Files_Load_Sales_Data/, use “Process_Sales_Files_Load_Sales_Data”This name is referenced in the orchestration file via

EXECUTE DBT PROJECTcommands

Click Connect > Deploy dbt project (top right corner)¶

For example, if your orchestration uses public.Package:

EXECUTE DBT PROJECT public.Package ARGS='build --select tag:package_dataflowtask --target dev';

Use Package as your project name when deploying.

Deploy dbt project modal - enter the project name¶

Note: Deploy all dbt projects in your migration.

Step 6: Run your dbt project¶

Select the correct database and schema before running your project.

Select the correct database and schema before running¶

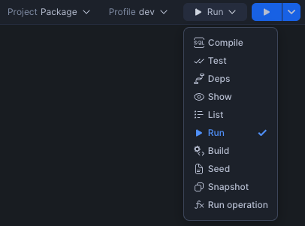

For single dataflow projects:

Run the dbt project directly if you have only one data flow.

Run the dbt project directly for single dataflow scenarios¶

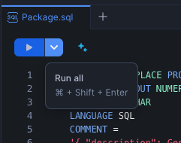

For multi-dataflow projects:

Run the orchestration SQL file to create all TASK objects

This creates the initialization TASK and all dependent TASKs

For reusable packages, this creates stored procedures instead

Click Run All to create all TASK objects for multi-dataflow projects¶

Execute the orchestration:

For TASK-based orchestration (standard packages):

-- Execute the root task

EXECUTE TASK public.Package;

For PROCEDURE-based orchestration (reusable packages):

-- Call the stored procedure

CALL public.PackageName();

Note: Check your generated SQL file to determine whether your package uses the TASK or PROCEDURE pattern.

Output Structure¶

SnowConvert generates an output structure that separates data transformation logic (dbt projects) from orchestration logic (Snowflake TASKs and PROCEDUREs).

Understanding this structure is essential for working with the migrated code.

Overview¶

SnowConvert organizes all migration output under the Output/ETL/ folder. Here’s the complete folder structure:

Output/

└── ETL/

├── etl_configuration/

│ ├── tables/

│ │ └── control_variables_table.sql

│ ├── functions/

│ │ └── GetControlVariableUDF.sql

│ └── procedures/

│ └── UpdateControlVariable.sql

├── {PackageName}/

│ ├── {PackageName}.sql # Main orchestration TASK

│ └── {DataFlowTaskName}/ # One dbt project per Data Flow Task

│ ├── dbt_project.yml

│ ├── profiles.yml

│ ├── models/

│ │ ├── sources.yml

│ │ ├── staging/

│ │ │ └── stg_raw__{component_name}.sql

│ │ ├── intermediate/

│ │ │ └── int_{component_name}.sql

│ │ └── marts/

│ │ └── {destination_component_name}.sql

│ ├── macros/

│ │ ├── m_update_control_variable.sql

│ │ └── m_update_row_count_variable.sql

│ ├── seeds/

│ └── tests/

└── (additional packages...)/

SSIS to SnowConvert Conversion Mapping:

SSIS Data Flow Tasks → dbt projects (one per Data Flow Task)

SSIS Control Flow → Snowflake TASK objects or stored procedures

SSIS Variables → control_variables table + UDFs + DBT variables

SSIS Containers → Inline conversion within parent TASK/procedure

ETL Configuration Components¶

The etl_configuration/ folder contains shared infrastructure components required by all ETL orchestrations. These components work together to manage variables across package executions:

control_variables_table.sql: Creates a transient table to store package variables, parameters, and their values across orchestration executions

GetControlVariableUDF.sql: User-defined function to retrieve variable values from the control variables table

UpdateControlVariable.sql: Stored procedure to update variable values during orchestration execution

Schema Dependencies: The UDFs and stored procedures in the etl_configuration/ folder are generated with hardcoded schema references (default: public). If you deploy these objects to a different schema, you must update the schema references within:

The

GetControlVariableUDF.sqlfunction (referencespublic.control_variablesin the SELECT statement)The

UpdateControlVariable.sqlprocedure (referencespublic.control_variablesin the MERGE statement)Any orchestration scripts that call these objects

Common Naming and Sanitization Rules¶

SnowConvert applies consistent sanitization rules to all SSIS object names to ensure dbt and Snowflake compatibility. This includes packages, tasks, components, and variables.

Rule |

Description |

Example |

|---|---|---|

Convert to lowercase |

All names converted to lowercase |

|

Replace invalid characters |

Spaces, hyphens, and special characters become underscores |

|

Remove consecutive underscores |

Avoids |

|

Prefix with |

Adds prefix if name starts with a number |

|

Remove quotes and brackets |

Strips surrounding quotes and brackets |

|

These rules apply uniformly across all generated artifacts: dbt model names, Snowflake TASK names, procedure names, and variable identifiers.

Data Flow Task Output (dbt Projects)¶

Each SSIS Data Flow Task (https://learn.microsoft.com/en-us/sql/integration-services/data-flow/data-flow?view=sql-server-ver17) is converted into a standalone dbt project with a three-tier architecture. These dbt projects contain all the data transformation logic from your original SSIS packages.

Supported Data Flow Components: For a complete list of supported sources, transformations, and destinations, see the SSIS Translation Reference.

Layer Organization¶

Each dbt project follows a three-tier architecture that separates data extraction, transformation, and loading:

Layer |

Materialization |

Purpose |

|---|---|---|

models/staging/ |

View |

Provides clean, type-safe access to source data referenced in |

models/intermediate/ |

Ephemeral |

Contains transformation logic from source ETL (not persisted to database for performance) |

models/marts/ |

Incremental or Table |

Final, business-ready data models corresponding to target tables. If the target overrides data in the table or re-creates the table, it will be materialized as a table, otherwise it will be materialized as an incremental model. |

Materialization configuration:

Default materializations are defined in dbt_project.yml. However, individual models can override these defaults when needed:

Use

{{ config(materialized='view') }}to change a specific model’s materializationUse

{{ config(alias='...') }}in mart models to customize the final table name in Snowflake

dbt Model Naming Conventions¶

SnowConvert uses prefixes to indicate each model’s layer in the dbt project:

Model Type |

Naming Pattern |

Examples |

|---|---|---|

Staging |

|

|

Intermediate |

|

|

Mart |

|

|

The stg_raw__ prefix indicates a staging model that selects from a raw source, while the int_ prefix marks intermediate transformation models. Mart models use the destination table name directly or can specify a custom alias.

Important notes:

All component names are sanitized according to the naming rules above

Mart models become the final table names in Snowflake

You can customize mart table names using

{{ config(alias='TableName') }}

dbt Project Organization¶

Organization structure:

One dbt project per Data Flow Task (e.g.,

Process_Sales_Files_Load_Sales_Data/)Package-level folder contains the orchestration SQL file and all dbt project folders

Models organized by layer (staging, intermediate, marts) within each dbt project

Orchestration execution uses

EXECUTE DBT PROJECTcommands

sources.yml Configuration¶

The sources.yml file, located in the models/ directory, declares all source tables used by the dbt project. This file serves three key purposes:

Connection: Links dbt models to raw data tables in Snowflake

Documentation: Provides metadata and descriptions for source systems

Lineage: Enables tracking data flow from sources through transformations

Important: Before deploying your dbt project, replace the YOUR_SCHEMA and YOUR_DB placeholders with your actual Snowflake schema and database names.

dbt Macros¶

Each dbt project includes these macros:

Macro |

Purpose |

|---|---|

m_update_control_variable.sql |

Updates control variables from dbt models and syncs changes to orchestration |

m_update_row_count_variable.sql |

Captures row counts from transformations (similar to SSIS row count updates) |

Control Flow Task Output (Orchestration)¶

SSIS control flow logic (https://learn.microsoft.com/en-us/sql/integration-services/control-flow/control-flow?view=sql-server-ver17) is converted into Snowflake orchestration using TASK objects or stored procedures. This orchestration layer manages the execution sequence of your dbt projects and handles variables, containers, and package execution.

Supported Control Flow Elements: For a complete list of supported tasks and containers, see the SSIS Translation Reference.

Orchestration Naming Conventions¶

Orchestration objects follow consistent naming patterns based on the SSIS package and task names:

Object Type |

Naming Pattern |

Example |

|---|---|---|

Orchestration files |

|

|

Package initialization TASK |

|

|

Data Flow TASK |

|

|

Stored Procedure (reusable) |

|

|

Notes:

All names are sanitized according to the naming rules described earlier

Stored procedures are used when packages are called by at least one ExecutePackage task from another control flow

Orchestration Approach¶

Each SSIS package generates an orchestration SQL file. The conversion pattern depends on whether the package is reused:

Standard Packages (not called by ExecutePackage tasks)¶

Standard packages that are not called by ExecutePackage tasks from other control flows are converted to Snowflake TASK objects. Each package typically generates two types of TASKs:

Initialization TASK: Creates and refreshes control variables for the package

Deletes existing package variables from the

control_variablestableInserts all variables and parameters with their default values using

TO_VARIANT()

Main Orchestration TASKs: Contains the core control flow logic

Declared with

WAREHOUSE=DUMMY_WAREHOUSE(update this to your actual warehouse name)Uses the

AFTERclause to establish task dependenciesExecutes converted control flow and data flow tasks

Reusable Packages (called by ExecutePackage tasks)¶

Packages that are called by at least one ExecutePackage task from another control flow are converted to stored procedures instead of TASK objects. This is necessary because Snowflake TASK objects can’t be called synchronously from other tasks.

Key characteristics:

FDM generated: SSC-FDM-SSIS0005

Invocation:

CALL schema.ProcedureName(params)from parent orchestrationBenefits: Enables synchronous execution and can be called from multiple parent packages with different parameter values

Example orchestration structure:

CREATE OR REPLACE TASK public.Package AS

BEGIN

-- Initialize control variables

DELETE FROM public.control_variables WHERE variable_scope = 'Package';

INSERT INTO public.control_variables ...

END;

CREATE OR REPLACE TASK public.package_data_flow_task

WAREHOUSE=DUMMY_WAREHOUSE

AFTER public.package

AS

BEGIN

-- Declare LET variables from control table

LET User_Variable VARCHAR := public.GetControlVariableUDF('User_Variable', 'Package') :: VARCHAR;

-- Execute dbt project

EXECUTE DBT PROJECT public.My_DataFlow_Project ARGS='build --target dev';

-- Update control variables

CALL public.UpdateControlVariable('User_Variable', 'Package', TO_VARIANT(:User_Variable));

END;

Variable Management¶

SSIS variables are converted into a comprehensive management system using four interconnected mechanisms:

1. Control Variables Table¶

The control_variables table serves as the central storage for all package variables and parameters. Each variable is stored with the following metadata:

Field |

Type |

Description |

|---|---|---|

|

VARCHAR |

Variable name |

|

VARIANT |

Value (accommodates any data type) |

|

VARCHAR |

Original SSIS data type |

|

VARCHAR |

Package or container name |

|

BOOLEAN |

Distinguishes parameters from variables |

|

BOOLEAN |

Reserved for future use |

|

TIMESTAMP |

Last update time |

2. GetControlVariableUDF Function¶

This user-defined function retrieves variable values within TASK logic. Use it to read variable values from the control variables table:

LET MyVar VARCHAR := public.GetControlVariableUDF('MyVar', 'Package') :: VARCHAR;

3. UpdateControlVariable Procedure¶

This stored procedure updates variable values during orchestration execution. Use it to write variable changes back to the control variables table:

CALL public.UpdateControlVariable('MyVar', 'Package', TO_VARIANT(:MyVar));

4. dbt Macros¶

Each dbt project includes macros that enable variable operations from within dbt models:

m_update_control_variable.sql: Updates control variables and syncs changes back to the orchestration layerm_update_row_count_variable.sql: Captures row counts from transformations, similar to SSIS row count variable updates

Inline Container Conversion Approach¶

SnowConvert uses an inline conversion approach for SSIS containers (https://learn.microsoft.com/en-us/sql/integration-services/control-flow/control-flow?view=sql-server-ver17) rather than creating separate procedures. This architectural decision preserves execution context and simplifies the migration.

Why inline conversion?

Migrating SSIS isn’t just “translate this component to that component.” It’s untangling control flow, variables, and data movement that have lived together for years. Our inline approach preserves that context:

One place to debug: Containers and branches are converted inline inside parent Snowflake procedures or tasks. No bouncing across tools to understand why something ran (or didn’t).

Deterministic orchestration: Standalone packages become Snowflake Tasks with explicit dependencies. Packages called by ExecutePackage tasks become procedures for clean, synchronous reuse.

Fewer naming collisions: We consistently sanitize object names across dbt models, tasks, procedures, and variables, so deployments remain predictable in shared environments.

Familiar, but modern: Data movement and business logic land in dbt with layered models and macros, while orchestration runs natively on Snowflake. Same mental model as SSIS—without the engine lock-in.

What gets converted inline:

Sequence Containers (https://learn.microsoft.com/en-us/sql/integration-services/control-flow/sequence-container?view=sql-server-ver17) - Sequential task execution with marked boundaries

For Loop Containers (https://learn.microsoft.com/en-us/sql/integration-services/control-flow/for-loop-container?view=sql-server-ver17) - Container structure preserved, iteration logic requires manual implementation

ForEach Loop Containers (https://learn.microsoft.com/en-us/sql/integration-services/control-flow/foreach-loop-container?view=sql-server-ver17) - File enumerators converted to Snowflake stage operations, other types require manual work

Event Handlers (https://learn.microsoft.com/en-us/sql/integration-services/integration-services-ssis-event-handlers?view=sql-server-ver17) - Not supported; implement using Snowflake exception handling

For detailed conversion specifications, examples, and EWI/FDM references for all control flow elements and task conversions, see the SSIS Translation Reference.