Parameters¶

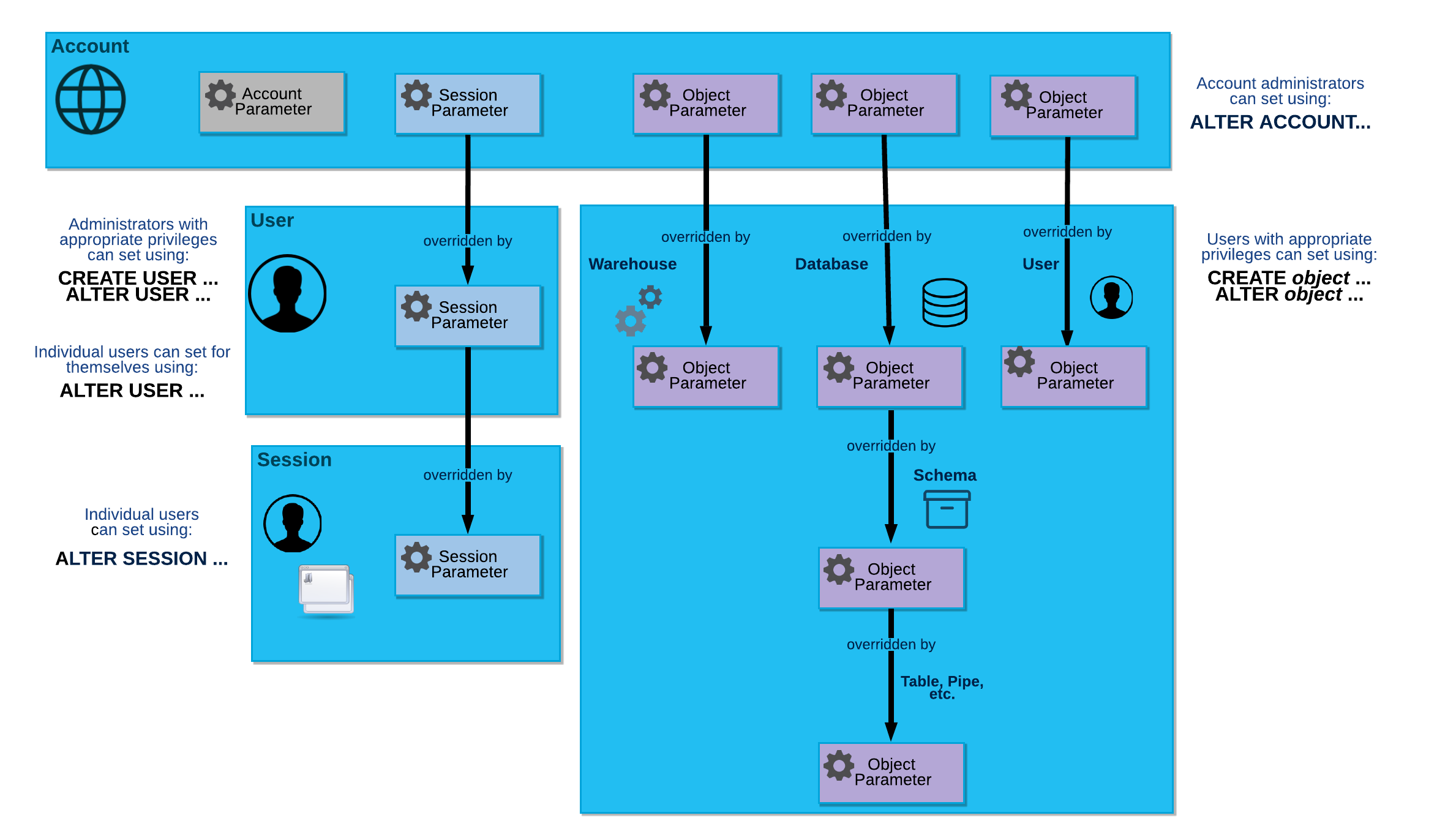

Snowflake provides parameters that let you control the behavior of your account, individual user sessions, and objects. All parameters have default values. You can set these parameters and override them at different levels, depending on the parameter type (account, session, or object).

Parameter hierarchy and types¶

This section describes the different types of parameters and the levels at which each type can be set. There are three types of parameters: account parameters, session parameters, and object parameters.

The following diagram illustrates the hierarchical relationship between the different parameter types and how individual parameters can be overridden at each level:

Account parameters¶

You can set account parameters only at the account level, and only when you are using a role that has been granted the privilege to set the parameter. To set an account parameter, run the ALTER ACCOUNT command.

Snowflake provides the following account parameters:

| Parameter | Notes |
|---|---|
| | Used to allow clients to access bind variable values. |
| | Used to enable connection caching in browser-based single sign-on (SSO) for Snowflake-provided clients. |
| | Used to specify the workload types that are allowed in your account to deploy to Snowpark Container Services. |
| | Used for encryption of files staged for data loading or unloading; might require additional installation and configuration (see description for details). |
| | Used to enable cross-region processing of Snowflake Cortex calls in a different region if the call cannot be processed in your account region. |
| | Used to disable granting of privileges directly to users. For more information, see GRANT privileges to USERS Usage notes. |
| | Used to specify the workload types that are disallowed in your account to deploy to Snowpark Container Services. |
| | Controls whether events from sensitive data classification are logged to the user event table. |
| | Controls whether events from budgets are logged to the event table. |
| | Used to enable or disable listing auto-fulfillment egress cost optimization. |
| | Allows the SYSTEM$GET_PRIVATELINK_CONFIG function to return the |
| | Used to enable or disable private notebooks on a Snowflake account. |
| ENABLE_SPCS_BLOCK_STORAGE_SNOWFLAKE_FULL_ENCRYPTION_ENFORCEMENT | Used to enable enforcement of SNOWFLAKE_FULL encryption for Snowpark Container Services block-storage volumes and snapshots. |
| | Controls whether Snowflake collects telemetry data for tag propagation. |
| | Used to specify an image repository’s choice to opt out of Tri-Secret Secure and periodic rekeying. |
| | Used to set the refresh schedule for all listings in an account. |
| | Used to set the minimum data retention period for retaining historical data for Time Travel operations. |
| | This is the only account parameter that can be set by either account administrators (that is, users with the ACCOUNTADMIN system role) or security administrators (that is, users with the SECURITYADMIN system role). For more information, see Object parameters. |
| | Used to specify whether to capture the SQL text of a traced SQL statement. |
| | Used to enable or disable Workspaces as the default SQL editor for the account. |

Note

By default, account parameters are not displayed in the output of SHOW PARAMETERS. For information about viewing account parameters, see Viewing the Parameters and Their Values (in this topic).
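The statements below are a minimal sketch of setting, inspecting, and resetting an account parameter. They assume your current user can use the ACCOUNTADMIN role; ALLOW_BIND_VALUES_ACCESS is chosen only as an illustration, and the same pattern should apply to other account parameters.

```sql
-- Switch to a role that is allowed to modify account parameters.
USE ROLE ACCOUNTADMIN;

-- Override the Snowflake default for the whole account.
ALTER ACCOUNT SET ALLOW_BIND_VALUES_ACCESS = FALSE;

-- Account parameters are listed only when the IN ACCOUNT clause is used.
SHOW PARAMETERS LIKE 'ALLOW_BIND_VALUES_ACCESS' IN ACCOUNT;

-- Remove the override so the Snowflake default applies again.
ALTER ACCOUNT UNSET ALLOW_BIND_VALUES_ACCESS;
```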

Session parameters¶

Most parameters are session parameters, which you can set at the following levels:

- Account:

Account administrators can run the ALTER ACCOUNT command to set session parameters for the account.

The values that you set at this level become the default values for individual users and their sessions.

- User:

Administrators with the appropriate privileges (typically, a user who has been granted the SECURITYADMIN role) can run the ALTER USER command to override session parameters for individual users. In addition, individual users can run the ALTER USER command to override default session parameters for themselves.

The values that you set for a user become the default values in any session started by that user.

- Session:

Users can run the ALTER SESSION command to override session parameters for the current session.
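As a sketch of how the three levels interact, the statements below set BINARY_OUTPUT_FORMAT at each level. The user name jdoe is hypothetical, and the account-level statement assumes a role such as ACCOUNTADMIN. The most specific value (session, then user, then account) is the one that takes effect.

```sql
-- Account level: becomes the default for every user and session.
ALTER ACCOUNT SET BINARY_OUTPUT_FORMAT = 'BASE64';

-- User level: overrides the account default for sessions started by jdoe (hypothetical user).
ALTER USER jdoe SET BINARY_OUTPUT_FORMAT = 'HEX';

-- Session level: overrides both of the above for the current session only.
ALTER SESSION SET BINARY_OUTPUT_FORMAT = 'BASE64';

-- Remove the session override; the user-level value applies again.
ALTER SESSION UNSET BINARY_OUTPUT_FORMAT;
```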

Note

By default, only session parameters are displayed in the output of SHOW PARAMETERS. For information about viewing account and object parameters, see Viewing the Parameters and Their Values (in this topic).

Object parameters¶

You can set object parameters at the following levels:

- Account:

Account administrators can run the ALTER ACCOUNT command to set object parameters for objects in the account.

The values that you set at this level become the default values for individual objects created in the account.

- Object:

Users with the appropriate privileges can run the CREATE <object> or ALTER <object> commands to override object parameters for an individual object.
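A minimal sketch of overriding an object parameter at successive levels, using BASE_LOCATION_PREFIX (described later in this topic). The database mydb, schema myschema, and the prefix strings are hypothetical; a lower-level setting overrides the value inherited from the level above.

```sql
-- Account level: default for objects created in the account (assumes ACCOUNTADMIN).
ALTER ACCOUNT SET BASE_LOCATION_PREFIX = 'acct_prefix';

-- Database level: overrides the account value for objects in mydb (hypothetical).
ALTER DATABASE mydb SET BASE_LOCATION_PREFIX = 'mydb_prefix';

-- Schema level: overrides the database value for objects in this schema only.
ALTER SCHEMA mydb.myschema SET BASE_LOCATION_PREFIX = 'myschema_prefix';

-- Object parameters appear only when the IN clause names the object.
SHOW PARAMETERS LIKE 'BASE_LOCATION_PREFIX' IN SCHEMA mydb.myschema;
```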

Snowflake provides the following object parameters:

| Parameter | Object Type | Notes |
|---|---|---|
| | Snowflake Scripting stored procedure | |
| | Database, Schema | Specifies a prefix to use in the write path for Apache Iceberg™ table files. |
| | Database, Schema, Apache Iceberg™ table | |
| | Account, Database, Schema, Apache Iceberg™ table | This parameter is supported only for Snowflake-managed Iceberg tables that you sync with Open Catalog. |
| | Cortex AI Functions and models | Comma-separated names of allowed Cortex language models, |
| | Table | Specifies the schedule to run the data metric functions associated with the table. All data metric functions on the table or view follow the same schedule. |
| | Database, Schema, Table | |
| | Database, Schema, Table | |
| | Database, Schema | |
| | Database, Schema | |
| | Account, Database, Schema | |
| | Account, User | |
| | Account, Database, Schema, Apache Iceberg™ table | This parameter is supported only for Snowflake-managed Iceberg tables. |
| | User | Affects the query history for queries that fail due to syntax or parsing errors. |
| | User | Affects redaction of error messages related to secure objects in metadata. |
| | Database, Account | |
| | Database, Schema, Apache Iceberg™ table | |
| | Account, Database, Schema, Stored Procedure, Function, Dynamic Table, Iceberg table, Task, Service | |
| | Warehouse | |
| | Database, Schema, Table | |
| | Account, Database, Schema, Stored Procedure, Function | |
| | User | This is the only user parameter that can be set by either account administrators (users with the ACCOUNTADMIN system role) or security administrators (users with the SECURITYADMIN system role). If this parameter is set on the account and a user in the same account, the user-level network policy overrides the account-level network policy. |
| | Apache Iceberg™ table | Specifies the path layout for Parquet data files written to partitioned Iceberg tables. |
| | Schema, Pipe | |
| | User | |
| | User | |
| | Database, Schema, file format, Apache Iceberg™ table | Can be set only for Iceberg tables that use an external Iceberg catalog. |
| | Database, Schema, Table | Use this parameter to enable row timestamps on your tables. For more information, see Use row timestamps to measure latency in your pipelines. |
| | Database, Schema, Table | Use this parameter to set row timestamps by default for new tables in a container. For more information, see Use row timestamps to measure latency in your pipelines. |
| | Database, Schema, Task, Account | |
| | Database, Schema, Task, Account | |
| | Warehouse | Also a session parameter (can be set at both the object and session levels). For inheritance and override details, see the parameter description. |
| | Warehouse | Also a session parameter (can be set at both the object and session levels). For inheritance and override details, see the parameter description. |
| | Database, Schema, Apache Iceberg™ table | This parameter is supported only for Iceberg tables that use Snowflake as the catalog. |
| | Database, Schema, Task | |
| | Database, Schema, Task | |
| | Account, Database, Schema, Stored Procedure, Function | |
| | Database, Schema, Task | |
| | Database, Schema, Task | |
| | Database, Schema, Task | |

Note

By default, object parameters are not displayed in the output of SHOW PARAMETERS. For information about viewing object parameters, see Viewing the Parameters and Their Values (in this topic).

Viewing the parameters and their values¶

To view the parameters that are set and their default values, run the SHOW PARAMETERS command. You can add different clauses to the command to display different types of parameters:

Viewing session parameters¶

By default, the command displays only session parameters:

SHOW PARAMETERS;

Viewing object parameters¶

To display the object parameters for a specific object, include the IN clause with the object type and name. For example:

SHOW PARAMETERS IN DATABASE mydb;

SHOW PARAMETERS IN WAREHOUSE mywh;

Viewing all parameters (including account and object parameters)¶

To display all parameters, including account and object parameters, include the IN ACCOUNT clause:

SHOW PARAMETERS IN ACCOUNT;

Limiting the list of parameters by name¶

You can specify the LIKE clause to limit the list of parameters by name. For example:

To display the session parameters with names containing “time”:

SHOW PARAMETERS LIKE '%time%';

To display all the parameters with names starting with “time”:

SHOW PARAMETERS LIKE 'time%' IN ACCOUNT;

Note

You must specify the LIKE clause before the IN clause.
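The following sketch combines the LIKE and IN clauses and then post-processes the SHOW output with RESULT_SCAN. It assumes that the level column of the output is empty for parameters that still use their default value; the user name jdoe is hypothetical.

```sql
-- Session parameters whose names start with BINARY, as seen by the current session.
SHOW PARAMETERS LIKE 'BINARY%' IN SESSION;

-- The same parameters as they apply to a specific (hypothetical) user.
SHOW PARAMETERS LIKE 'BINARY%' IN USER jdoe;

-- List account parameters, then keep only those explicitly set at some level.
SHOW PARAMETERS IN ACCOUNT;
SELECT "key", "value", "default", "level"
FROM TABLE(RESULT_SCAN(LAST_QUERY_ID()))
WHERE "level" <> '';
```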

ABORT_DETACHED_QUERY¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies the action that Snowflake performs for in-progress queries if connectivity is lost due to abrupt termination of a session (for example, a network outage, browser termination, or service interruption).

- Values:

TRUE: In-progress queries are aborted 5 minutes after connectivity is lost.

FALSE: In-progress queries are completed.

- Default:

FALSE

Note

For client drivers, closing the connection from the client side (such as calling connection.close()) is different from actually logging out of the Snowflake session. Closing the connection can be associated with cleaning up resources owned by the connection, including but not limited to performing a session logout. Performing a session logout also implies that any queries still running in the same session (for example, queries submitted asynchronously) are canceled a couple of minutes after the session is logged out, even if the ABORT_DETACHED_QUERY parameter is set to false (the default value). Therefore, some Snowflake drivers implement their own business logic to decide whether session logout is performed when the connection is closed.

Currently, this functionality is implemented in the following drivers:

Go Snowflake Driver (https://pkg.go.dev/github.com/snowflakedb/gosnowflake#hdr-Asynchronous_Queries)

Most queries require compute resources in order to be executed. These resources are provided by virtual warehouses, which consume credits while running. If the Snowflake session is not terminated when the connection closes, warehouses might continue running and consuming credits to complete any queries that were in progress at the time the connection was closed, up to the value of the STATEMENT_TIMEOUT_IN_SECONDS parameter, which has a default of two days.
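As a minimal sketch, the statements below enable ABORT_DETACHED_QUERY for the current session and for a hypothetical service user named etl_service_user, and cap statement run time with STATEMENT_TIMEOUT_IN_SECONDS to limit credit consumption. The one-hour timeout is an arbitrary example value.

```sql
-- Abort in-progress queries about 5 minutes after connectivity is lost (current session only).
ALTER SESSION SET ABORT_DETACHED_QUERY = TRUE;

-- Apply the same behavior to every session started by a hypothetical service user.
ALTER USER etl_service_user SET ABORT_DETACHED_QUERY = TRUE;

-- Independently bound how long any statement can run: 3600 seconds instead of the 2-day default.
ALTER SESSION SET STATEMENT_TIMEOUT_IN_SECONDS = 3600;
```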

ACTIVE_PYTHON_PROFILER¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String (Constant)

- Description:

Sets the profiler to use for the session when profiling Python handler code.

- Values:

'LINE': The profiler focuses on line usage activity.

'MEMORY': The profiler focuses on memory usage activity.

- Default:

None.
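A minimal sketch of switching profilers for a session. The procedure name my_python_proc is hypothetical, and retrieving the resulting profile may require additional setup that this parameter alone does not cover.

```sql
-- Profile line usage for Python handler code run in this session.
ALTER SESSION SET ACTIVE_PYTHON_PROFILER = 'LINE';

-- Run the Python handler to be profiled (hypothetical procedure).
CALL my_python_proc();

-- Switch to memory profiling for subsequent calls.
ALTER SESSION SET ACTIVE_PYTHON_PROFILER = 'MEMORY';

-- Revert to the default (no profiler).
ALTER SESSION UNSET ACTIVE_PYTHON_PROFILER;
```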

ALLOW_BIND_VALUES_ACCESS¶

- Type:

Account — Can only be set for Account

- Data Type:

Boolean

- Description:

Specifies whether clients can access bind variable values by using the BIND_VALUES table function, the QUERY_HISTORY Account Usage view, the QUERY_HISTORY Organization Usage view, or the QUERY_HISTORY function. For more information, see Retrieve bind variable values.

- Values:

TRUE: Allows the retrieval of bind variable values.

FALSE: Doesn’t allow retrieval of bind variable values.

- Default:

TRUE

ALLOW_CLIENT_MFA_CACHING¶

- Type:

Account — Can only be set for Account

- Data Type:

Boolean

- Description:

Specifies whether an MFA token can be saved in the client-side operating system keystore to promote continuous, secure connectivity without users needing to respond to an MFA prompt at the start of each connection attempt to Snowflake. For details and the list of supported Snowflake-provided clients, see Using MFA token caching to minimize the number of prompts during authentication — optional.

- Values:

TRUE: Stores an MFA token in the client-side operating system keystore to enable the client application to use the MFA token whenever a new connection is established. While this parameter is TRUE, users are not prompted to respond to additional MFA prompts.

FALSE: Does not store an MFA token. Users must respond to an MFA prompt whenever the client application establishes a new connection with Snowflake.

- Default:

FALSE

ALLOW_ID_TOKEN¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Specifies whether a connection token can be saved in the client-side operating system keystore to promote continuous, secure connectivity without users needing to enter login credentials at the start of each connection attempt to Snowflake. For details and the list of supported Snowflake-provided clients, see Using connection caching to minimize the number of prompts for authentication — Optional.

- Values:

TRUE: Stores a connection token in the client-side operating system keystore to enable the client application to perform browser-based SSO without prompting users to authenticate whenever a new connection is established.

FALSE: Does not store a connection token. Users are prompted to authenticate whenever the client application establishes a new connection with Snowflake. SSO to Snowflake is still possible if this parameter is set to FALSE.

- Default:

FALSE
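As a sketch, both token-caching parameters are enabled with ALTER ACCOUNT. This assumes the ACCOUNTADMIN role is available; supported clients may also need their own client-side configuration before they use the cache.

```sql
USE ROLE ACCOUNTADMIN;

-- Allow supported clients to cache an MFA token in the OS keystore.
ALTER ACCOUNT SET ALLOW_CLIENT_MFA_CACHING = TRUE;

-- Allow supported clients to cache a connection token for browser-based SSO.
ALTER ACCOUNT SET ALLOW_ID_TOKEN = TRUE;
```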

ALLOWED_SPCS_WORKLOAD_TYPES¶

- Type:

Account — Can be set only for Account

- Data Type:

String

- Description:

Specifies the workload types that are allowed in your account to deploy to Snowpark Container Services. Also see DISALLOWED_SPCS_WORKLOAD_TYPES.

- Values:

The value is a comma-separated list of the following supported workload types:

USER: Any workloads directly deployed by users.

NOTEBOOK: Snowflake Notebooks.

STREAMLIT: Streamlit in Snowflake.

MODEL_SERVING: ML Model Serving.

ML_JOB: Snowflake ML Jobs.

ALL: All workloads.

- Default:

ALL

Note

If you configure both ALLOWED_SPCS_WORKLOAD_TYPES and DISALLOWED_SPCS_WORKLOAD_TYPES, DISALLOWED_SPCS_WORKLOAD_TYPES takes precedence. For example, if you configure both these parameters and specify the NOTEBOOK workload, NOTEBOOK workloads aren’t allowed to run on Snowpark Container Services.
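The statements below sketch the precedence rule from the note above. The workload list is an example only, and the comma-separated value is assumed to be passed as a single quoted string.

```sql
USE ROLE ACCOUNTADMIN;

-- Allow only notebooks and Streamlit apps to deploy to Snowpark Container Services.
ALTER ACCOUNT SET ALLOWED_SPCS_WORKLOAD_TYPES = 'NOTEBOOK,STREAMLIT';

-- NOTEBOOK now appears in both lists; the disallowed list wins,
-- so only STREAMLIT workloads remain allowed.
ALTER ACCOUNT SET DISALLOWED_SPCS_WORKLOAD_TYPES = 'NOTEBOOK';
```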

AUTO_EVENT_LOGGING¶

- Type:

Object (for Snowflake Scripting stored procedures)

- Data Type:

String (Constant)

- Description:

Controls whether Snowflake Scripting log messages and trace events are ingested automatically into the event table. To set this parameter, run the ALTER PROCEDURE command.

- Values:

LOGGING: Automatically adds the following additional logging information to the event table when a procedure is executed:

BEGIN/END of a Snowflake Scripting block.

BEGIN/END of a child job request.

This information is added to the event table only if the effective LOG_LEVEL is set to TRACE for the stored procedure.

TRACING: Automatically adds the following additional trace information to the event table when a stored procedure is executed:

Exception catching.

Information about child job execution.

Child job statistics.

Stored procedure statistics, including execution time and input values.

This information is added to the event table only if the effective TRACE_LEVEL is set to ALWAYS or ON_EVENT for the stored procedure.

ALL: Automatically adds both the logging information added for the LOGGING value and the trace information added for the TRACING value.

OFF: Does not automatically add logging information or trace information to the event table.

- Default:

OFF

For more information about using this parameter, see Setting levels for logging, metrics, and tracing, Automatically add log messages about blocks and child jobs, and Automatically emit trace events for child jobs and exceptions.
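A minimal sketch, assuming a Snowflake Scripting procedure named my_scripting_proc that takes one VARCHAR argument (a hypothetical name and signature) and an event table already active for the account. The LOG_LEVEL and TRACE_LEVEL settings satisfy the conditions described under Values.

```sql
-- LOGGING output is recorded only if the effective LOG_LEVEL is TRACE.
ALTER PROCEDURE my_scripting_proc(VARCHAR) SET LOG_LEVEL = 'TRACE';

-- TRACING output is recorded only if the effective TRACE_LEVEL is ALWAYS or ON_EVENT.
ALTER PROCEDURE my_scripting_proc(VARCHAR) SET TRACE_LEVEL = 'ALWAYS';

-- Capture both the automatic log messages and the automatic trace events.
ALTER PROCEDURE my_scripting_proc(VARCHAR) SET AUTO_EVENT_LOGGING = 'ALL';
```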

AUTOCOMMIT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies whether autocommit is enabled for the session. Autocommit determines whether a DML statement, when executed without an active transaction, is automatically committed after the statement successfully completes. For more information, see Transactions.

Note

Setting this parameter to FALSE stops usage data from being saved to the ORGANIZATION_USAGE schema of an organization account.

- Values:

TRUE: Autocommit is enabled.

FALSE: Autocommit is disabled, meaning DML statements must be explicitly committed or rolled back.

- Default:

TRUE

Note

The FALSE value isn’t supported for tasks.
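A minimal sketch of explicit transaction control with autocommit disabled. The table my_table is hypothetical and is assumed to have a single INTEGER column.

```sql
-- Disable autocommit for the current session.
ALTER SESSION SET AUTOCOMMIT = FALSE;

-- The first DML statement implicitly starts a transaction.
INSERT INTO my_table VALUES (1);
INSERT INTO my_table VALUES (2);

-- Neither row is visible to other sessions until the transaction is committed.
COMMIT;

-- Restore the default behavior.
ALTER SESSION SET AUTOCOMMIT = TRUE;
```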

AUTOCOMMIT_API_SUPPORTED (view-only)¶

- Type:

N/A

- Data Type:

Boolean

- Description:

For Snowflake internal use only. View-only parameter that indicates whether API support for autocommit is enabled for your account. If the value is TRUE, you can enable or disable autocommit through the APIs for the following drivers/connectors:

BASE_LOCATION_PREFIX¶

- Type:

Object (for databases and schemas) — Can be set for Account » Database » Schema

- Data Type:

String

- Description:

Specifies a prefix for Snowflake to use in the write path for Snowflake-managed Apache Iceberg™ tables. For more information, see data and metadata directories for Iceberg tables.

- Values:

Any valid string prefix that complies with the storage naming conventions of your cloud provider.

- Default:

None

BINARY_INPUT_FORMAT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String (Constant)

- Description:

The format of VARCHAR values passed as input to VARCHAR-to-BINARY conversion functions. For more information, see Binary input and output.

- Values:

HEX, BASE64, or UTF8/UTF-8

- Default:

HEX

BINARY_OUTPUT_FORMAT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String (Constant)

- Description:

The format for VARCHAR values returned as output by BINARY-to-VARCHAR conversion functions. For more information, see Binary input and output.

- Values:

HEX or BASE64

- Default:

HEX
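The sketch below shows how the two formats interact. With BINARY_INPUT_FORMAT set to BASE64, the single-argument form of TO_BINARY decodes its input as Base64; an explicit format argument overrides the parameter for that call. BINARY_OUTPUT_FORMAT controls the format used when the resulting BINARY values are converted to text for display. 'SGVsbG8=' and '48656C6C6F' are the Base64 and hex encodings of the string Hello, so both queries should produce the same bytes.

```sql
-- Interpret VARCHAR input to VARCHAR-to-BINARY conversions as Base64.
ALTER SESSION SET BINARY_INPUT_FORMAT = 'BASE64';

-- Display BINARY results as hexadecimal.
ALTER SESSION SET BINARY_OUTPUT_FORMAT = 'HEX';

-- Uses BINARY_INPUT_FORMAT (Base64) because no format argument is given.
SELECT TO_BINARY('SGVsbG8=');

-- The explicit 'HEX' argument overrides BINARY_INPUT_FORMAT for this call.
SELECT TO_BINARY('48656C6C6F', 'HEX');
```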

CATALOG¶

- Type:

Object (for databases, schemas, and Apache Iceberg™ tables) — Can be set for Account » Database » Schema » Iceberg table

- Data Type:

String

- Description:

Specifies the catalog for Apache Iceberg™ tables. For more information, see the Iceberg table documentation.

- Values:

SNOWFLAKE or any valid catalog integration identifier.

- Default:

None

CATALOG_SYNC¶

- Type:

Object (for databases, schemas, and Iceberg tables) — Can be set for Account » Database » Schema » Iceberg table

- Data Type:

String

- Description:

Specifies the name of your catalog integration for Snowflake Open Catalog. Snowflake syncs tables that use the specified catalog integration with your Snowflake Open Catalog account. For more information, see Sync a Snowflake-managed table with Snowflake Open Catalog.

- Values:

The name of any existing catalog integration for Open Catalog.

- Default:

None
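A sketch of setting both Iceberg catalog parameters on a schema. The names mydb, myschema, and my_open_catalog_int are hypothetical; my_open_catalog_int stands for an existing catalog integration for Open Catalog.

```sql
-- Use Snowflake as the catalog for Iceberg tables created in this schema.
ALTER SCHEMA mydb.myschema SET CATALOG = 'SNOWFLAKE';

-- Sync Snowflake-managed Iceberg tables in this schema with Open Catalog.
ALTER SCHEMA mydb.myschema SET CATALOG_SYNC = 'my_open_catalog_int';

-- Confirm the values that apply to the schema.
SHOW PARAMETERS LIKE 'CATALOG%' IN SCHEMA mydb.myschema;
```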

CLIENT_ENABLE_LOG_INFO_STATEMENT_PARAMETERS¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Clients:

JDBC

- Description:

Enables users to log the data values bound to PreparedStatements.

To see the values, you must not only set this session-level parameter to TRUE, but also set the connection parameter named TRACING to either INFO or ALL.

Set TRACING to ALL to see all debugging information and all binding information.

Set TRACING to INFO to see the binding parameter values with less of the other debugging information.

Caution

If you bind confidential information, such as medical diagnoses or passwords, that information is logged. Snowflake recommends making sure that the log file is secure, or using only test data, when you set this parameter to TRUE.

- Values:

TRUE or FALSE.

- Default:

FALSE

CLIENT_ENCRYPTION_KEY_SIZE¶

- Type:

Account — Can be set only for Account

- Data Type:

Integer

- Clients:

Any

- Description:

Specifies the AES encryption key size, in bits, used by Snowflake to encrypt/decrypt files stored on internal stages (for loading/unloading data) when you use the SNOWFLAKE_FULL encryption type.

- Values:

128 or 256

- Default:

128

Note

This parameter is not used for encrypting/decrypting files stored in external stages (that is, S3 buckets or Azure containers). Encryption/decryption of these files is accomplished using an external encryption key explicitly specified in the COPY command or in the named external stage referenced in the command.

If you are using the JDBC driver and you wish to set this parameter to 256 (for strong encryption), additional JCE policy files must be installed on each client machine from which data is loaded/unloaded. For more information about installing the required files, see Java requirements for the JDBC Driver.

If you are using the Python connector (or SnowSQL) and you wish to set this parameter to 256 (for strong encryption), no additional installation or configuration tasks are required.

CLIENT_MEMORY_LIMIT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Integer

- Clients:

JDBC, ODBC

- Description:

Parameter that specifies the maximum amount of memory the JDBC driver or ODBC driver should use for the result set from queries (in MB).

For the JDBC driver:

To simplify JVM memory management, the parameter sets a global maximum memory usage limit for all queries.

CLIENT_RESULT_CHUNK_SIZE specifies the maximum size of each set (or chunk) of query results to download (in MB). The driver might require additional memory to process a chunk; if so, it will adjust memory usage during runtime to process at least one thread/query. Verify that CLIENT_MEMORY_LIMIT is set significantly higher than CLIENT_RESULT_CHUNK_SIZE to ensure sufficient memory is available.

For the ODBC driver:

This parameter is supported in version 2.22.0 and higher.

CLIENT_RESULT_CHUNK_SIZE is not supported.

Note

The driver will attempt to honor the parameter value, but will cap usage at 80% of your system memory.

The memory usage limit set in this parameter does not apply to any other JDBC or ODBC driver operations (for example, connecting to the database, preparing a query, or running PUT and GET statements).

- Values:

Any valid number of megabytes.

- Default:

1536 (effectively 1.5 GB)

Most users should not need to set this parameter. If this parameter is not set by the user, the driver starts with the default specified above.

In addition, the JDBC driver actively manages its memory conservatively to avoid using up all available memory.
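As a sketch, the statements below raise the result-set memory limit for a hypothetical user named etl_user while keeping CLIENT_RESULT_CHUNK_SIZE well below it, as recommended above. Both values are in MB and are example values, not tuning advice.

```sql
-- Global result-set memory limit for the JDBC or ODBC driver (MB).
ALTER USER etl_user SET CLIENT_MEMORY_LIMIT = 3072;

-- Maximum size of each downloaded result chunk (MB); JDBC only, must be within 16 to 160.
ALTER USER etl_user SET CLIENT_RESULT_CHUNK_SIZE = 64;
```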

CLIENT_METADATA_REQUEST_USE_CONNECTION_CTX¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Clients:

JDBC, ODBC

- Description:

For specific ODBC functions and JDBC methods, this parameter can change the default search scope from all databases/schemas to the current database/schema. The narrower search typically returns fewer rows and executes more quickly.

For example, the getTables() JDBC method accepts a database name and schema name as arguments, and returns the names of the tables in the database and schema. If the database and schema arguments are null, then by default, the method searches all databases and all schemas in the account. Setting CLIENT_METADATA_REQUEST_USE_CONNECTION_CTX to TRUE narrows the search to the current database and schema specified by the connection context.

In essence, setting this parameter to TRUE creates the following precedence for database and schema:

Values passed as arguments to the functions/methods.

Values specified in the connection context (if any).

Default (all databases and all schemas).

For more details, see the information below.

This parameter applies to the following:

JDBC driver methods (for the DatabaseMetaData class):

getColumns, getCrossReference, getExportedKeys, getForeignKeys, getFunctions, getImportedKeys, getPrimaryKeys, getSchemas, getTables

ODBC driver functions:

SQLTables, SQLColumns, SQLPrimaryKeys, SQLForeignKeys, SQLGetFunctions, SQLProcedures

- Values:

TRUE: If the database and schema arguments are null, then the driver retrieves metadata for only the database and schema specified by the connection context.

The interaction is described in more detail in the table below.

FALSE: If the database and schema arguments are null, then the driver retrieves metadata for all databases and schemas in the account.

- Default:

FALSE

- Additional Notes:

The connection context refers to the current database and schema for the session, which can be set using any of the following options:

Specify the default namespace for the user who connects to Snowflake (and initiates the session). This can be set for the user through the CREATE USER or ALTER USER command, but must be set before the user connects.

Specify the database and schema when connecting to Snowflake through the driver.

Issue a USE DATABASE or USE SCHEMA command within the session.

If the database or schema was specified by more than one of these, then the most recent one applies.

When CLIENT_METADATA_REQUEST_USE_CONNECTION_CTX is set to TRUE:

| database argument | schema argument | Database used | Schema used |
|---|---|---|---|
| Non-null | Non-null | Argument | Argument |
| Non-null | Null | Argument | All schemas |
| Null | Non-null | Connection context | Argument |
| Null | Null | Connection context | Session context |

Note

For the JDBC driver, this behavior applies to version 3.6.27 (and higher). For the ODBC driver, this behavior applies to version 2.12.96 (and higher).

If you want to search only the connection context database, but want to search all schemas within that database, see CLIENT_METADATA_USE_SESSION_DATABASE.
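A minimal sketch of giving a user a connection context and enabling the narrower metadata search. The names jdoe, mydb, myschema, and other_schema are hypothetical. DEFAULT_NAMESPACE is the user property that sets the default database and schema.

```sql
-- Default namespace for the user; must be set before the user connects.
ALTER USER jdoe SET DEFAULT_NAMESPACE = 'mydb.myschema';

-- Narrow metadata searches with null database/schema arguments to the connection context.
ALTER USER jdoe SET CLIENT_METADATA_REQUEST_USE_CONNECTION_CTX = TRUE;

-- Inside a session, a later USE command changes the connection context;
-- the most recent setting applies.
USE SCHEMA mydb.other_schema;
```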

CLIENT_METADATA_USE_SESSION_DATABASE¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Clients:

JDBC

- Description:

This parameter applies to only the methods affected by CLIENT_METADATA_REQUEST_USE_CONNECTION_CTX.

This parameter applies only when both of the following conditions are met:

CLIENT_METADATA_REQUEST_USE_CONNECTION_CTX is FALSE or unset.

No database or schema is passed to the relevant ODBC function or JDBC method.

For specific ODBC functions and JDBC methods, this parameter can change the default search scope from all databases to the current database. The narrower search typically returns fewer rows and executes more quickly.

For more details, see the information below.

- Values:

TRUE: The driver searches all schemas in the connection context’s database. (For more details about the connection context, see the documentation for CLIENT_METADATA_REQUEST_USE_CONNECTION_CTX.)

FALSE: The driver searches all schemas in all databases.

- Default:

FALSE

- Additional Notes:

When the database is null and the schema is null and CLIENT_METADATA_REQUEST_USE_CONNECTION_CTX is FALSE:

| CLIENT_METADATA_USE_SESSION_DATABASE | Behavior |
|---|---|
| FALSE | All schemas in all databases are searched. |
| TRUE | All schemas in the current database are searched. |

CLIENT_PREFETCH_THREADS¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Integer

- Clients:

JDBC, ODBC, Python, .NET

- Description:

Parameter that specifies the number of threads used by the client to pre-fetch large result sets. The driver will attempt to honor the parameter value, but defines the minimum and maximum values (depending on your system’s resources) to improve performance.

- Values:

1 to 10

- Default:

4

Most users should not need to set this parameter. If this parameter is not set by the user, the driver starts with the default specified above, but also actively manages its thread count conservatively to avoid using up all available memory.

CLIENT_RESULT_CHUNK_SIZE¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Integer

- Clients:

JDBC, Node.js, SQL API, Go

- Description:

Parameter that specifies the maximum size of each set (or chunk) of query results to download (in MB). The JDBC driver downloads query results in chunks.

Also see CLIENT_MEMORY_LIMIT.

- Values:

16 to 160

- Default:

160

Most users should not need to set this parameter. If this parameter is not set by the user, the driver starts with the default specified above, but also actively manages its memory conservatively to avoid using up all available memory.

CLIENT_RESULT_COLUMN_CASE_INSENSITIVE¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Clients:

JDBC

- Description:

Parameter that indicates whether to match column names case-insensitively in ResultSet.get* methods in JDBC.

- Values:

TRUE: Matches column names case-insensitively.

FALSE: Matches column names case-sensitively.

- Default:

FALSE

CLIENT_SESSION_KEEP_ALIVE¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Clients:

.NET, Golang, JDBC, Node.js, ODBC, Python

- Description:

Parameter that indicates whether to force a user to log in again after a period of inactivity in the session.

- Values:

TRUE: Snowflake keeps the session active indefinitely as long as the connection is active, even if there is no activity from the user.

FALSE: The user must log in again after four hours of inactivity.

- Default:

FALSE

Note

Currently, the parameter takes effect only when the session is initiated. You can modify the parameter value at the session level by running an ALTER SESSION command, but doing so does not affect the session keep-alive functionality, such as extending the session. For information about setting the parameter at the session level, see the client documentation:

CLIENT_SESSION_KEEP_ALIVE_HEARTBEAT_FREQUENCY¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Integer

- Clients:

SnowSQL, JDBC, Python, Node.js

- Description:

Number of seconds between client attempts to update the token for the session.

- Values:

900 to 3600

- Default:

3600
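Because CLIENT_SESSION_KEEP_ALIVE takes effect only when a session is initiated, setting it at the user (or account) level is likely more reliable than ALTER SESSION. A sketch, assuming a hypothetical user named jdoe:

```sql
-- Keep jdoe's future sessions alive while the connection is active.
ALTER USER jdoe SET CLIENT_SESSION_KEEP_ALIVE = TRUE;

-- Refresh the session token every 900 seconds (the minimum allowed value).
ALTER USER jdoe SET CLIENT_SESSION_KEEP_ALIVE_HEARTBEAT_FREQUENCY = 900;
```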

CLIENT_TIMESTAMP_TYPE_MAPPING¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String (Constant)

- Clients:

Any

- Description:

Specifies the TIMESTAMP_* variation to use when binding timestamp variables for JDBC or ODBC applications that use the bind API to load data.

- Values:

TIMESTAMP_LTZ or TIMESTAMP_NTZ

- Default:

TIMESTAMP_LTZ

CORTEX_MODELS_ALLOWLIST¶

- Type:

Account — Can be set only for Account

- Data Type:

String

- Description:

Specifies the models that users in the account can access. Use this parameter to allowlist models for all users in the account. If you need to provide specific users with access beyond what you’ve specified in the allowlist, use role-based access control instead. For more information, see Account-level allowlist parameter.

When users make a request, Snowflake Cortex evaluates the parameter to determine whether the user can access the model.

- Values:

'All': Provides access to all models, including fine-tuned models.

Example:

ALTER ACCOUNT SET CORTEX_MODELS_ALLOWLIST = 'All';

'model1,model2,...': Provides access to the models specified in a comma-separated list.

Example:

ALTER ACCOUNT SET CORTEX_MODELS_ALLOWLIST = 'mistral-large2,llama3.1-70b';

'None': Prevents access to any model.

Example:

ALTER ACCOUNT SET CORTEX_MODELS_ALLOWLIST = 'None';

- Default:

'All'

CORTEX_ENABLED_CROSS_REGION¶

- Type:

Account — Can be set only for Account

- Data Type:

String

- Description:

Specifies the regions where an inference request may be processed if the request cannot be processed in the region where the request is originally placed. Specifying DISABLED disables cross-region inference. For examples and details, see Cross-region inference.

- Values:

This parameter can be set to one of the following:

DISABLED

ANY_REGION

Comma-separated list including one or more of the following values:

AWS_APJ, AWS_AU, AWS_EU, AWS_US, AZURE_EU, AZURE_US, GCP_US

Explanation of each parameter value¶

DISABLED: Inference requests are handled only in the region where the request is placed.

ANY_REGION: Inference requests may be routed to any region that supports cross-region inference (listed below) and has availability, including the region where the request is placed.

AWS_APJ: Inference requests are handled in the region where the request is placed and in the following AWS regions:

AWS Asia Pacific (Tokyo) ap-northeast-1

AWS Asia Pacific (Seoul) ap-northeast-2

AWS Asia Pacific (Osaka) ap-northeast-3

AWS Asia Pacific (Mumbai) ap-south-1

AWS Asia Pacific (Hyderabad) ap-south-2

AWS Asia Pacific (Singapore) ap-southeast-1

AWS Asia Pacific (Sydney) ap-southeast-2

AWS Asia Pacific (Melbourne) ap-southeast-4

AWS_AU: Inference requests are handled in the region where the request is placed and in the following AWS regions:

AWS Asia Pacific (Sydney) ap-southeast-2

AWS Asia Pacific (Melbourne) ap-southeast-4

AWS_EU: Inference requests are handled in the region where the request is placed and in the following AWS regions, which are (and will be) located within the European Union:

AWS Europe (Frankfurt) eu-central-1

AWS Europe (Stockholm) eu-north-1

AWS Europe (Milan) eu-south-1

AWS Europe (Spain) eu-south-2

AWS Europe (Ireland) eu-west-1

AWS Europe (Paris) eu-west-3

AWS_US: Inference requests are handled in the region where the request is placed and in the following AWS regions, which are (and will be) located within the United States:

AWS US East (N. Virginia) us-east-1

AWS US East (Ohio) us-east-2

AWS US West (Oregon) us-west-2

AZURE_EU: Inference requests are handled in the region where the request is placed and in the following Azure regions, which are (and will be) located within the European Union:

Azure Europe (Netherlands) westeurope

Azure Europe (France) francecentral

Azure Europe (Germany) germanywestcentral

Azure Europe (Italy) italynorth

Azure Europe (Poland) polandcentral

Azure Europe (Spain) spaincentral

Azure Europe (Sweden) swedencentral

AZURE_US: Inference requests are handled in the region where the request is placed and in the following Azure regions, which are (and will be) located within the United States:

Azure US (Virginia) eastus2

Azure US (Virginia) eastus

Azure US (California) westus

Azure US (Phoenix) westus3

Azure US (Illinois) northcentralus

Azure US (Texas) southcentralus

GCP_US: Inference requests are handled in the region where the request is placed and in the following GCP regions, which are (and will be) located within the United States:

GCP US (Iowa) us-central1

GCP US (Oregon) us-west1

GCP US (Las Vegas) us-west4

GCP US (N. Virginia) us-east4

- Default:

DISABLED
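Here is a sketch of the three kinds of value. It assumes a role that is allowed to run ALTER ACCOUNT (typically ACCOUNTADMIN). Each statement replaces the previous setting, so only the last one run remains in effect.

```sql
-- Allow fallback only to AWS regions in the United States or the European Union.
ALTER ACCOUNT SET CORTEX_ENABLED_CROSS_REGION = 'AWS_US,AWS_EU';

-- Allow fallback to any region that supports cross-region inference.
ALTER ACCOUNT SET CORTEX_ENABLED_CROSS_REGION = 'ANY_REGION';

-- Turn cross-region inference off again (the default).
ALTER ACCOUNT SET CORTEX_ENABLED_CROSS_REGION = 'DISABLED';
```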

CSV_TIMESTAMP_FORMAT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String

- Description:

Specifies the format for TIMESTAMP values in CSV files downloaded from Snowsight.

If this parameter is not set, Snowflake uses TIMESTAMP_LTZ_OUTPUT_FORMAT for TIMESTAMP_LTZ values, TIMESTAMP_TZ_OUTPUT_FORMAT for TIMESTAMP_TZ values, and TIMESTAMP_NTZ_OUTPUT_FORMAT for TIMESTAMP_NTZ values.

For more information, see Date and time input and output formats or Download your query results.

- Values:

Any valid, supported timestamp format.

- Default:

No value.

DATA_METRIC_SCHEDULE¶

- Type:

Object (for tables)

- Data Type:

String

- Description:

Specifies the schedule for running the data metric functions associated with the table.

- Values:

The schedule can be based on a defined number of minutes, a cron expression, or a DML event on the table that does not involve reclustering. For details, see:

- Default:

60 MINUTE
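The following sketch shows the three schedule styles on a hypothetical table named `my_db.my_schema.orders`. The 30-minute interval and the 08:00 UTC cron time are illustrative assumptions. Only the last statement run remains in effect.

```sql
-- Hypothetical table name. Run the table's data metric functions every 30 minutes.
ALTER TABLE my_db.my_schema.orders SET DATA_METRIC_SCHEDULE = '30 MINUTE';

-- Or run them every day at 08:00 UTC using a cron expression.
ALTER TABLE my_db.my_schema.orders SET DATA_METRIC_SCHEDULE = 'USING CRON 0 8 * * * UTC';

-- Or run them when DML changes the table.
ALTER TABLE my_db.my_schema.orders SET DATA_METRIC_SCHEDULE = 'TRIGGER_ON_CHANGES';
```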

DATA_RETENTION_TIME_IN_DAYS¶

- Type:

Object (for databases, schemas, and tables) — Can be set for Account » Database » Schema » Table

- Data Type:

Integer

- Description:

Number of days for which Snowflake retains historical data for performing Time Travel actions (SELECT, CLONE, UNDROP) on the object. A value of 0 effectively disables Time Travel for the specified database, schema, or table. For more information, see Understanding & using Time Travel.

- Values:

0 or 1 (for Standard Edition)

0 to 90 (for Enterprise Edition or higher)

- Default:

1
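This sketch overrides the inherited value for individual tables and then shows where the effective value comes from. The object names are hypothetical, and the 30-day value assumes Enterprise Edition or higher.

```sql
-- Hypothetical names. Keep 30 days of Time Travel history for one table
-- (values above 1 require Enterprise Edition or higher).
ALTER TABLE my_db.my_schema.orders SET DATA_RETENTION_TIME_IN_DAYS = 30;

-- Disable Time Travel for a scratch table when it is created.
CREATE TABLE my_db.my_schema.scratch (id INTEGER) DATA_RETENTION_TIME_IN_DAYS = 0;

-- Show the effective value and the level it was set at.
SHOW PARAMETERS LIKE 'DATA_RETENTION_TIME_IN_DAYS' IN TABLE my_db.my_schema.orders;
```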

DATE_INPUT_FORMAT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String

- Description:

Specifies the input format for the DATE data type. For more information, see Date and time input and output formats.

- Values:

Any valid, supported date format or AUTO (AUTO specifies that Snowflake attempts to automatically detect the format of dates stored in the system during the session)

- Default:

AUTO

DATE_OUTPUT_FORMAT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String

- Description:

Specifies the display format for the DATE data type. For more information, see Date and time input and output formats.

- Values:

Any valid, supported date format

- Default:

YYYY-MM-DD
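As an illustration, a session that needs to accept and display day-first dates could set both format parameters, then unset them to fall back to the user or account values. The format string `DD/MM/YYYY` is only an example.

```sql
-- Accept day-first input such as '15/03/2024' in this session.
ALTER SESSION SET DATE_INPUT_FORMAT = 'DD/MM/YYYY';

-- Display DATE values in the same day-first style.
ALTER SESSION SET DATE_OUTPUT_FORMAT = 'DD/MM/YYYY';

SELECT '15/03/2024'::DATE AS d;

-- Fall back to the values inherited from the user or account.
ALTER SESSION UNSET DATE_INPUT_FORMAT;
ALTER SESSION UNSET DATE_OUTPUT_FORMAT;
```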

DEFAULT_DDL_COLLATION¶

- Type:

Object (for databases, schemas, and tables) — Can be set for Account » Database » Schema » Table

- Data Type:

String

- Description:

Sets the default collation used for the following DDL operations:

CREATE TABLE

ALTER TABLE … ADD COLUMN

Setting this parameter forces all subsequently created columns in the affected objects (table, schema, database, or account) to have the specified collation as the default, unless the collation for the column is explicitly defined in the DDL.

For example, if DEFAULT_DDL_COLLATION = 'en-ci', then the following two statements are equivalent:

CREATE TABLE test(c1 INTEGER, c2 STRING, c3 STRING COLLATE 'en-cs');

CREATE TABLE test(c1 INTEGER, c2 STRING COLLATE 'en-ci', c3 STRING COLLATE 'en-cs');

Note

This parameter isn’t supported for dynamic tables and Apache Iceberg™ tables. This parameter isn’t supported on indexed columns for hybrid tables.

- Values:

Any valid, supported collation specification.

- Default:

Empty string

Note

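To set the default collation for the account, use the following command:

ALTER ACCOUNT SET DEFAULT_DDL_COLLATION = '<collation_specification>';

The default collation for table columns can be set at the table, schema, or database level during creation or at any time afterwards:

CREATE TABLE <table_name> ... DEFAULT_DDL_COLLATION = '<collation_specification>'

CREATE SCHEMA <schema_name> ... DEFAULT_DDL_COLLATION = '<collation_specification>'

CREATE DATABASE <database_name> ... DEFAULT_DDL_COLLATION = '<collation_specification>'

ALTER TABLE <table_name> SET DEFAULT_DDL_COLLATION = '<collation_specification>'

ALTER SCHEMA <schema_name> SET DEFAULT_DDL_COLLATION = '<collation_specification>'

ALTER DATABASE <database_name> SET DEFAULT_DDL_COLLATION = '<collation_specification>'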

DEFAULT_NOTEBOOK_COMPUTE_POOL_CPU¶

- Type:

Object (for databases and schemas) — Can be set for Account » Database » Schema

- Data Type:

String

- Description:

Sets the preferred CPU compute pool used for Notebooks on CPU Container Runtime.

- Values:

Name of a compute pool in your account.

- Default:

SYSTEM_COMPUTE_POOL_CPU (see System compute pools).

DEFAULT_NOTEBOOK_COMPUTE_POOL_GPU¶

- Type:

Object (for databases and schemas) — Can be set for Account » Database » Schema

- Data Type:

String

- Description:

Sets the preferred GPU compute pool used for Notebooks on GPU Container Runtime.

- Values:

Name of a compute pool in your account.

- Default:

SYSTEM_COMPUTE_POOL_GPU (see System compute pools).

DEFAULT_NULL_ORDERING¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String

- Description:

Specifies the default ordering of NULL values in a result set.

The ordering of NULL values in a result set depends on the sort order in the ORDER BY clause:

When the sort order is ASC (the default) and this parameter is set to LAST (the default), NULL values are returned last. Therefore, unless specified otherwise, NULL values are considered to be higher than any non-NULL values.

When the sort order is ASC and this parameter is set to FIRST, NULL values are returned first.

When the sort order is DESC and this parameter is set to FIRST, NULL values are returned last.

When the sort order is DESC and this parameter is set to LAST, NULL values are returned first.

If a NULL ordering is specified in the ORDER BY clause with NULLS FIRST or NULLS LAST, then the specified ordering takes precedence over any value of DEFAULT_NULL_ORDERING.

- Values:

FIRST: NULL values are lower than any non-NULL values.

LAST: NULL values are higher than any non-NULL values.

- Default:

LAST
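The following sketch uses a hypothetical temporary table to show how the parameter and an explicit NULLS clause interact in an ascending sort. The orders noted in the comments follow from the rules above.

```sql
-- Hypothetical temporary table with one NULL value.
CREATE OR REPLACE TEMPORARY TABLE null_demo (score INTEGER);
INSERT INTO null_demo VALUES (2), (NULL), (1);

-- LAST (the default): ascending order returns 1, 2, NULL.
ALTER SESSION SET DEFAULT_NULL_ORDERING = 'LAST';
SELECT score FROM null_demo ORDER BY score ASC;

-- FIRST: ascending order returns NULL, 1, 2.
ALTER SESSION SET DEFAULT_NULL_ORDERING = 'FIRST';
SELECT score FROM null_demo ORDER BY score ASC;

-- An explicit NULLS LAST overrides the parameter: 1, 2, NULL.
SELECT score FROM null_demo ORDER BY score ASC NULLS LAST;
```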

DEFAULT_STREAMLIT_NOTEBOOK_WAREHOUSE¶

- Type:

Object (for databases and schemas) — Can be set for Account » Database » Schema

- Data Type:

String

- Description:

Specifies the name of the default warehouse to use when creating a notebook.

For more information, see ALTER ACCOUNT, ALTER DATABASE, and ALTER SCHEMA.

- Values:

The name of any existing warehouse.

- Default:

SYSTEM$STREAMLIT_NOTEBOOK_WH

DISABLE_USER_PRIVILEGE_GRANTS¶

- Type:

Object (for users) — Can be set only for Account

- Data Type:

Boolean

- Description:

Controls whether users in an account can grant privileges directly to other users.

Disabling user privilege grants (that is, setting DISABLE_USER_PRIVILEGE_GRANTS to TRUE) doesn’t affect existing grants to users. Existing grants to users continue to confer privileges to those users. For more information, see GRANT <privileges> … TO USER.

- Values:

TRUE: Users in the account cannot grant privileges to another user.

FALSE: Users in the account can grant privileges to another user.

- Default:

FALSE

DISALLOWED_SPCS_WORKLOAD_TYPES¶

- Type:

Account — Can be set only for Account

- Data Type:

String

- Description:

Specifies the workload types that are disallowed in your account to deploy to Snowpark Container Services. Also see ALLOWED_SPCS_WORKLOAD_TYPES.

- Values:

The value is a comma-separated list of the following supported workload types:

USER: Any workloads directly deployed by users.

NOTEBOOK: Snowflake Notebooks.

STREAMLIT: Streamlit in Snowflake.

MODEL_SERVING: ML Model Serving.

ML_JOB: Snowflake ML Jobs.

ALL: All workloads.

- Default:

Empty string

Note

If you configure both DISALLOWED_SPCS_WORKLOAD_TYPES and ALLOWED_SPCS_WORKLOAD_TYPES parameters, Snowflake first applies DISALLOWED_SPCS_WORKLOAD_TYPES. For example, if you configure both these parameters and specify the NOTEBOOK workload, NOTEBOOK workloads are not allowed to run on Snowpark Container Services.
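This sketch illustrates the precedence rule. It assumes a role that can alter the account and that both parameters take a quoted, comma-separated list as described above. The configuration itself is hypothetical.

```sql
-- Hypothetical configuration: NOTEBOOK appears in both lists.
ALTER ACCOUNT SET ALLOWED_SPCS_WORKLOAD_TYPES = 'NOTEBOOK,STREAMLIT';
ALTER ACCOUNT SET DISALLOWED_SPCS_WORKLOAD_TYPES = 'NOTEBOOK';

-- DISALLOWED_SPCS_WORKLOAD_TYPES is applied first, so NOTEBOOK workloads cannot run
-- on Snowpark Container Services; STREAMLIT workloads remain allowed.
-- Review both settings:
SHOW PARAMETERS LIKE '%SPCS_WORKLOAD_TYPES' IN ACCOUNT;
```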

ENABLE_AUTOMATIC_SENSITIVE_DATA_CLASSIFICATION_LOG¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Controls whether events from sensitive data classification are logged in the user event table.

- Values:

TRUE: Snowflake logs events for sensitive data classification in the user event table.

FALSE: Events for sensitive data classification are not logged.

- Default:

TRUE

ENABLE_BUDGET_EVENT_LOGGING¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Controls whether telemetry data is collected for budgets.

- Values:

TRUE: Snowflake logs telemetry data that is related to budgets to an event table.

FALSE: Snowflake doesn’t log telemetry data that is related to budgets.

- Default:

TRUE

ENABLE_DATA_COMPACTION¶

- Type:

Object (for databases, schemas, and Iceberg tables) — Can be set for Account » Database » Schema » Iceberg Table

- Data Type:

Boolean

- Description:

Specifies whether Snowflake should enable data compaction on Snowflake-managed Apache Iceberg™ tables.

- Values:

TRUE: Snowflake performs data compaction on the tables.

FALSE: Snowflake doesn’t perform data compaction on the tables.

- Default:

TRUE

ENABLE_EGRESS_COST_OPTIMIZER¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Enables or disables the egress cost optimizer for cross-cloud auto-fulfillment of listings.

- Values:

TRUE: Enables the egress cost optimizer.

FALSE: Disables the egress cost optimizer.

- Default:

FALSE

For more information, see Auto-fulfillment for listings.

ENABLE_GET_DDL_USE_DATA_TYPE_ALIAS¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies whether the output returned by the GET_DDL function contains data type synonyms specified in the original DDL statement. Data type synonyms are also called data type aliases.

- Values:

TRUE: Show the data type aliases specified in the original DDL statement.

FALSE: Replace the data type aliases specified in the original DDL statement with standard Snowflake data type names.

You can set this parameter to TRUE to generate DDL statements using the GET_DDL function that specify data type aliases as defined in the original SQL statements, which might be required to preserve data model integrity during migrations.

The following are examples of data type aliases:

CHAR is an alias for the VARCHAR data type.

BIGINT is an alias for the NUMBER data type.

DATETIME is an alias for the TIMESTAMP_NTZ data type.

The following statement creates a table using the aliases for the data types:

CREATE TABLE test_get_ddl_aliases(x CHAR, y BIGINT, z DATETIME);

When this parameter is set to FALSE, the GET_DDL function returns the following output:

ALTER SESSION SET ENABLE_GET_DDL_USE_DATA_TYPE_ALIAS = FALSE;

SELECT GET_DDL('TABLE', 'test_get_ddl_aliases');

+------------------------------------------------+

| GET_DDL('TABLE', 'TEST_GET_DDL_ALIASES') |

|------------------------------------------------|

| create or replace TABLE TEST_GET_DDL_ALIASES ( |

| X VARCHAR(1), |

| Y NUMBER(38,0), |

| Z TIMESTAMP_NTZ(9) |

| ); |

+------------------------------------------------+

When this parameter is set to TRUE, the GET_DDL function returns the following output:

ALTER SESSION SET ENABLE_GET_DDL_USE_DATA_TYPE_ALIAS = TRUE;

SELECT GET_DDL('TABLE', 'test_get_ddl_aliases');

+------------------------------------------------+

| GET_DDL('TABLE', 'TEST_GET_DDL_ALIASES') |

|------------------------------------------------|

| create or replace TABLE TEST_GET_DDL_ALIASES ( |

| X CHAR, |

| Y BIGINT, |

| Z DATETIME |

| ); |

+------------------------------------------------+

- Default:

FALSE

ENABLE_IDENTIFIER_FIRST_LOGIN¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Determines the login flow for users. When enabled, Snowflake prompts users for their username or email address before presenting authentication methods. For details, see Identifier-first login.

- Values:

TRUE: Snowflake uses an identifier-first login flow to authenticate users.

FALSE: Snowflake presents all possible login options, even if those options don’t apply to a particular user.

- Default:

FALSE

ENABLE_INTERNAL_STAGES_PRIVATELINK¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Specifies whether the SYSTEM$GET_PRIVATELINK_CONFIG function returns the private-internal-stages key in the query result. The corresponding value in the query result is used during the configuration process for private connectivity to internal stages. The value of this parameter also affects the behavior of system functions related to private connectivity. For example, TRUE enables SYSTEM$REVOKE_STAGE_PRIVATELINK_ACCESS and FALSE turns off SYSTEM$REVOKE_STAGE_PRIVATELINK_ACCESS.

- Values:

TRUE: Returns the private-internal-stages key and value in the query result.

FALSE: Doesn’t return the private-internal-stages key and value in the query result.

- Default:

FALSE

ENABLE_NOTEBOOK_CREATION_IN_PERSONAL_DB¶

- Type:

User — Can be set for Account > User

- Data Type:

Boolean

- Description:

Specifies whether users can create private notebooks (stored in their personal databases). When TRUE, users in the account can create private notebooks (assuming other necessary privileges are granted).

- Values:

TRUE: Enables users to create private notebooks.

FALSE: Prevents users from creating private notebooks.

- Default:

FALSE

ENABLE_SPCS_BLOCK_STORAGE_SNOWFLAKE_FULL_ENCRYPTION_ENFORCEMENT¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Enables enforcement of SNOWFLAKE_FULL encryption type for Snowpark Container Services block-storage volumes and snapshots.

- Values:

TRUE: Enforces creation of SPCS block-storage volumes and snapshots only with the SNOWFLAKE_FULL encryption type. The SNOWFLAKE_SSE encryption type isn’t permitted. All existing block-storage volumes and snapshots with the SNOWFLAKE_SSE encryption type must be migrated to SNOWFLAKE_FULL before you enable this parameter. Setting the parameter value to TRUE while SNOWFLAKE_SSE encrypted volumes or snapshots still exist results in an error.

FALSE: Both SNOWFLAKE_SSE and SNOWFLAKE_FULL encryption types are permitted for SPCS block-storage volumes and snapshots in the account.

- Default:

FALSE

ENABLE_TAG_PROPAGATION_EVENT_LOGGING¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Controls whether telemetry data is collected for automatic tag propagation.

- Values:

TRUE: Snowflake logs telemetry data that is related to tag propagation to an event table.

FALSE: Snowflake doesn’t log telemetry data that is related to tag propagation.

- Default:

FALSE

ENABLE_TRI_SECRET_AND_REKEY_OPT_OUT_FOR_IMAGE_REPOSITORY¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Specifies whether the image repository opts out of Tri-Secret Secure and periodic rekeying.

- Values:

TRUE: Opts the image repository out of Tri-Secret Secure and periodic rekeying.

FALSE: Disallows creating an image repository in accounts that have Tri-Secret Secure and periodic rekeying enabled. Similarly, disallows enabling Tri-Secret Secure and periodic rekeying in accounts that have an image repository.

- Default:

FALSE

ENABLE_UNHANDLED_EXCEPTIONS_REPORTING¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies whether Snowflake may capture log messages or trace event data for unhandled exceptions in procedure or UDF handler code and store that data in an event table. For more information, see Capturing messages from unhandled exceptions.

- Values:

TRUE: Data about unhandled exceptions is captured as log or trace data if logging and tracing are enabled.

FALSE: Data about unhandled exceptions is not captured.

- Default:

TRUE

ENABLE_UNLOAD_PHYSICAL_TYPE_OPTIMIZATION¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies whether to set the schema for unloaded Parquet files based on the logical column data types (that is, the types in the unload SQL query or source table) or on the unloaded column values (that is, the smallest data types and precision that support the values in the output columns of the unload SQL statement or source table).

- Values:

TRUE: The schema of unloaded Parquet data files is determined by the column values in the unload SQL query or source table. Snowflake optimizes table columns by setting the smallest precision that accepts all of the values, and the unloader follows this pattern when writing values to Parquet files: the data type and precision of an output column are set to the smallest data type and precision that support its values in the unload SQL statement or source table. Accept this setting for better performance and smaller data files.

FALSE: The schema is determined by the logical column data types. Set this value for a consistent output file schema.

- Default:

TRUE
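This sketch keeps the Parquet schema consistent across unloads. It assumes a hypothetical stage named `@my_stage` and a hypothetical table named `my_db.my_schema.orders`.

```sql
-- Base the Parquet schema on the logical column types rather than on the values.
ALTER SESSION SET ENABLE_UNLOAD_PHYSICAL_TYPE_OPTIMIZATION = FALSE;

-- Hypothetical stage and table names.
COPY INTO @my_stage/orders/
  FROM my_db.my_schema.orders
  FILE_FORMAT = (TYPE = PARQUET);
```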

ENABLE_UNREDACTED_QUERY_SYNTAX_ERROR¶

- Type:

User — Can be set for Account » User

- Data Type:

Boolean

- Description:

Controls whether query text is redacted if a SQL query fails due to a syntax or parsing error. If FALSE, the content of a failed query is redacted in the views, pages, and functions that provide a query history.

Only users with a role that is granted or inherits the AUDIT privilege can set the ENABLE_UNREDACTED_QUERY_SYNTAX_ERROR parameter.

When using the ALTER USER command to set the parameter to TRUE for a particular user, modify the user who should see the query text, not the user who executed the query (if those are different users).

- Values:

TRUE: Disables the redaction of query text for queries that fail due to a syntax or parsing error.

FALSE: Redacts the contents of a query from the views, pages, and functions that provide a query history when a query fails due to a syntax or parsing error.

- Default:

FALSE
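This sketch enables unredacted query text for a hypothetical reviewer named `security_reviewer`. Run it with a role that has (or inherits) the AUDIT privilege.

```sql
-- Hypothetical user: the person who should see the text of failed queries,
-- not the user who ran them.
ALTER USER security_reviewer SET ENABLE_UNREDACTED_QUERY_SYNTAX_ERROR = TRUE;

-- Remove the user-level setting so the account-level value (FALSE by default) applies again.
ALTER USER security_reviewer UNSET ENABLE_UNREDACTED_QUERY_SYNTAX_ERROR;
```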

ENABLE_UNREDACTED_SECURE_OBJECT_ERROR¶

- Type:

User — Can be set for Account » User

- Data Type:

Boolean

- Description:

Controls whether error messages related to secure objects are redacted in metadata. For more information, see Secure objects: Redaction of information in error messages.

Only users with a role that is granted or inherits the AUDIT privilege can set the ENABLE_UNREDACTED_SECURE_OBJECT_ERROR parameter.

When using the ALTER USER command to set the parameter to TRUE for a particular user, modify the user who should see the unredacted error messages in metadata, not the user who caused the error.

- Values:

TRUE: Disables the redaction of error messages related to secure objects in metadata.

FALSE: Redacts the contents of error messages related to secure objects in metadata.

- Default:

FALSE

ENFORCE_NETWORK_RULES_FOR_INTERNAL_STAGES¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Specifies whether a network policy that uses network rules can restrict access to AWS internal stages.

This parameter has no effect on network policies that do not use network rules.

This account-level parameter affects both account-level and user-level network policies.

For details about using network policies and network rules to restrict access to AWS internal stages, including the use of this parameter, see Protecting internal stages on AWS.

- Values:

TRUE: Allows network policies that use network rules to restrict access to AWS internal stages. The network rule must also use the appropriate MODE and TYPE to restrict access to the internal stage.

FALSE: Network policies never restrict access to internal stages.

- Default:

FALSE

ERROR_ON_NONDETERMINISTIC_MERGE¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies whether to return an error when the MERGE command is used to update or delete a target row that joins multiple source rows and the system cannot determine the action to perform on the target row.

- Values:

TRUE: An error is returned that includes values from one of the target rows that caused the error.

FALSE: No error is returned and the merge completes successfully, but the results of the merge are nondeterministic.

- Default:

TRUE
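The following sketch uses hypothetical temporary tables to set up the case this parameter guards against: two source rows with different values match the same target row.

```sql
-- Hypothetical tables: two source rows match the single target row with id = 1.
CREATE OR REPLACE TEMPORARY TABLE merge_target (id INTEGER, val STRING);
CREATE OR REPLACE TEMPORARY TABLE merge_source (id INTEGER, val STRING);
INSERT INTO merge_target VALUES (1, 'old');
INSERT INTO merge_source VALUES (1, 'a'), (1, 'b');

-- TRUE (the default): the MERGE returns an error because the new value is ambiguous.
ALTER SESSION SET ERROR_ON_NONDETERMINISTIC_MERGE = TRUE;
MERGE INTO merge_target t USING merge_source s ON t.id = s.id
  WHEN MATCHED THEN UPDATE SET val = s.val;

-- FALSE: the MERGE succeeds, but val may end up as either 'a' or 'b'.
ALTER SESSION SET ERROR_ON_NONDETERMINISTIC_MERGE = FALSE;
MERGE INTO merge_target t USING merge_source s ON t.id = s.id
  WHEN MATCHED THEN UPDATE SET val = s.val;
```

Rather than turning the check off, it is usually safer to deduplicate the source so that each target row matches at most one source row.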

ERROR_ON_NONDETERMINISTIC_UPDATE¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies whether to return an error when the UPDATE command is used to update a target row that joins multiple source rows and the system cannot determine the action to perform on the target row.

- Values:

TRUE: An error is returned that includes values from one of the target rows that caused the error.

FALSE: No error is returned and the update completes, but the results of the update are nondeterministic.

- Default:

FALSE

EVENT_TABLE¶

- Type:

Object — Can be set for Account » Database

- Data Type:

String

- Description:

Specifies the name of the event table for logging messages from stored procedures and UDFs contained by the object with which the event table is associated.

Associating an event table with a database is available in Enterprise Edition or higher.

- Values:

Any existing event table created by executing the CREATE EVENT TABLE command.

- Default:

None
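This sketch associates one event table with the account and a separate one with a single database. All object names are hypothetical, and the database-level statement assumes Enterprise Edition or higher.

```sql
-- Hypothetical names. Create an event table and make it the account's active event table.
CREATE EVENT TABLE my_db.telemetry.account_events;
ALTER ACCOUNT SET EVENT_TABLE = my_db.telemetry.account_events;

-- Enterprise Edition or higher: send events from procedures and UDFs in sales_db
-- to a separate event table.
CREATE EVENT TABLE my_db.telemetry.sales_events;
ALTER DATABASE sales_db SET EVENT_TABLE = my_db.telemetry.sales_events;
```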

EXTERNAL_OAUTH_ADD_PRIVILEGED_ROLES_TO_BLOCKED_LIST¶

- Type:

Account — Can be set only for Account

- Data Type:

Boolean

- Description:

Determines whether the ACCOUNTADMIN, ORGADMIN, GLOBALORGADMIN, and SECURITYADMIN roles can be used as the primary role when creating a Snowflake session based on the access token from the External OAuth authorization server.

- Values:

TRUE: Adds the ACCOUNTADMIN, ORGADMIN, GLOBALORGADMIN, and SECURITYADMIN roles to the EXTERNAL_OAUTH_BLOCKED_ROLES_LIST property of the External OAuth security integration, which means these roles cannot be used as the primary role when creating a Snowflake session using External OAuth authentication.

FALSE: Removes the ACCOUNTADMIN, ORGADMIN, GLOBALORGADMIN, and SECURITYADMIN roles from the list of blocked roles defined by the EXTERNAL_OAUTH_BLOCKED_ROLES_LIST property of the External OAuth security integration.

- Default:

TRUE

EXTERNAL_VOLUME¶

- Type:

Object (for databases, schemas, and Apache Iceberg™ tables) — Can be set for Account » Database » Schema » Iceberg table

- Data Type:

String

- Description:

Specifies the external volume for Apache Iceberg™ tables. For more information, see the Iceberg table documentation.

- Values:

Any valid external volume identifier.

- Default:

None

GEOGRAPHY_OUTPUT_FORMAT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String (Constant)

- Description:

Display format for GEOGRAPHY values.

For EWKT and EWKB, the SRID is always 4326 in the output. Refer to the note on EWKT and EWKB handling.

- Values:

GeoJSON, WKT, WKB, EWKT, or EWKB

- Default:

GeoJSON
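A short sketch that switches the display format for the current session. The sample point is arbitrary.

```sql
-- Show GEOGRAPHY values as WKT instead of the default GeoJSON.
ALTER SESSION SET GEOGRAPHY_OUTPUT_FORMAT = 'WKT';
SELECT TO_GEOGRAPHY('POINT(-122.35 37.55)') AS g;

-- EWKT also includes the SRID, which is always 4326 for GEOGRAPHY output.
ALTER SESSION SET GEOGRAPHY_OUTPUT_FORMAT = 'EWKT';
SELECT TO_GEOGRAPHY('POINT(-122.35 37.55)') AS g;
```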

GEOMETRY_OUTPUT_FORMAT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

String (Constant)

- Description:

Display format for GEOMETRY values.

- Values:

GeoJSON, WKT, WKB, EWKT, or EWKB

- Default:

GeoJSON

HYBRID_TABLE_LOCK_TIMEOUT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Integer

- Description:

Number of seconds to wait while trying to acquire row-level locks on a hybrid table, before timing out and aborting the statement.

- Values:

0 to any integer (no limit). A value of 0 disables lock waiting (that is, the statement must acquire the lock immediately or abort). This value specifies how long the statement waits to acquire all of the row-level locks it needs during each execution attempt (1 hour by default). If the statement cannot acquire all of the locks, it can be retried, and the same waiting period is applied.

- Default:

3600 (1 hour)

See also LOCK_TIMEOUT.

INITIAL_REPLICATION_SIZE_LIMIT_IN_TB¶

- Type:

Account — Can be set only for Account

- Data Type:

Number

- Description:

Sets the maximum estimated size limit for the initial replication of a primary database to a secondary database (in TB). Set this parameter on any account that stores a secondary database. This size limit helps prevent accounts from accidentally incurring large database replication charges.

To remove the size limit, set the value to 0.0.

Note that there is currently no default size limit applied to subsequent refreshes of a secondary database.

- Values:

0.0 and above with a scale of at least 1 (for example, 20.5, 32.25, or 33.333).

- Default:

10.0
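This sketch is meant to run in the account that stores the secondary database, with a role that can alter that account.

```sql
-- Allow an initial replication of up to 20.5 TB (the value needs a scale of at least 1).
ALTER ACCOUNT SET INITIAL_REPLICATION_SIZE_LIMIT_IN_TB = 20.5;

-- Remove the limit for initial replication entirely.
ALTER ACCOUNT SET INITIAL_REPLICATION_SIZE_LIMIT_IN_TB = 0.0;
```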

JDBC_ENABLE_PUT_GET¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies whether to allow PUT and GET commands access to local file systems.

- Values:

TRUE: JDBC enables PUT and GET commands.

FALSE: JDBC disables PUT and GET commands.

- Default:

TRUE

JDBC_TREAT_DECIMAL_AS_INT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies how JDBC processes columns that have a scale of zero (0).

- Values:

TRUE: JDBC processes a column whose scale is zero as BIGINT.

FALSE: JDBC processes a column whose scale is zero as DECIMAL.

- Default:

TRUE

JDBC_TREAT_TIMESTAMP_NTZ_AS_UTC¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies how JDBC processes TIMESTAMP_NTZ values.

By default, when the JDBC driver fetches a value of type TIMESTAMP_NTZ from Snowflake, it converts the value to “wallclock” time using the client JVM timezone.

To use UTC for the conversion instead, set this parameter to TRUE. This parameter applies only to the JDBC driver.

- Values:

TRUE: The driver uses UTC to get the TIMESTAMP_NTZ value in “wallclock” time.

FALSE: The driver uses the client JVM’s current timezone to get the TIMESTAMP_NTZ value in “wallclock” time.

- Default:

FALSE

JDBC_USE_SESSION_TIMEZONE¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies whether the JDBC Driver uses the time zone of the JVM or the time zone of the session (specified by the TIMEZONE parameter) for the getDate(), getTime(), and getTimestamp() methods of the ResultSet class.

- Values:

TRUE: The JDBC Driver uses the time zone of the session.

FALSE: The JDBC Driver uses the time zone of the JVM.

- Default:

TRUE

JSON_INDENT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Integer

- Description:

Specifies the number of blank spaces to indent each new element in JSON output in the session. Also specifies whether to insert newline characters after each element.

- Values:

0 to 16 (a value of 0 returns compact output by removing all blank spaces and newline characters from the output)

- Default:

2

Note

This parameter does not affect JSON unloaded from a table into a file using the COPY INTO <location> command. The command always unloads JSON data in the NDJSON format:

Each record from the table separated by a newline character.

Within each record, compact formatting (that is, no spaces or newline characters).
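This sketch compares compact and indented output for the same value in the current session.

```sql
-- Compact output: no blank spaces or newline characters.
ALTER SESSION SET JSON_INDENT = 0;
SELECT PARSE_JSON('{"a": 1, "b": [1, 2]}') AS v;

-- Indented output: each element on its own line, four spaces per nesting level.
ALTER SESSION SET JSON_INDENT = 4;
SELECT PARSE_JSON('{"a": 1, "b": [1, 2]}') AS v;
```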

JS_TREAT_INTEGER_AS_BIGINT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Boolean

- Description:

Specifies how the Snowflake Node.js Driver processes numeric columns that have a scale of zero (0), for example INTEGER or NUMBER(p, 0).

- Values:

TRUE: JavaScript processes a column whose scale is zero as Bigint.

FALSE: JavaScript processes a column whose scale is zero as Number.

- Default:

FALSE

Note

By default, Snowflake INTEGER columns (including BIGINT, NUMBER(p, 0), etc.) are converted to JavaScript’s Number data type. However, Snowflake integers can have up to 38 digits, while a JavaScript Number represents integers exactly only up to 2^53 - 1 (Number.MAX_SAFE_INTEGER), so larger values lose precision. To convert Snowflake INTEGER columns to JavaScript Bigint, which stores larger integer values exactly, set the session parameter JS_TREAT_INTEGER_AS_BIGINT.

For examples of how to use this parameter, see Fetching integer data types as Bigint.

LISTING_AUTO_FULFILLMENT_REPLICATION_REFRESH_SCHEDULE¶

- Type:

Account — Can be set only for Account

- Data Type:

String

- Description:

Sets the time interval used to refresh data products based on application packages to other regions.

- Values:

num MINUTES: A value between 1 and 11520. Must include the unit MINUTES.

USING CRON expr time_zone: Specifies a cron expression and time zone for the refresh. Supports a subset of standard cron utility syntax.

For a list of time zones, see the Wikipedia topic list of tz database time zones. The cron expression consists of the following fields:

# __________ minute (0-59)
# | ________ hour (0-23)
# | | ______ day of month (1-31, or L)
# | | | ____ month (1-12, JAN-DEC)
# | | | | __ day of week (0-6, SUN-SAT, or L)
# | | | | |
# | | | | |
  * * * * *

The following special characters are supported:

*: Wildcard. Specifies any occurrence of the field.

L: Stands for “last”. When used in the day-of-week field, it allows you to specify constructs such as “the last Friday” (“5L”) of a given month. In the day-of-month field, it specifies the last day of the month.

/n: Indicates the nth instance of a given unit of time. Each quantum of time is computed independently. For example, if 4/3 is specified in the month field, the refresh is scheduled for April, July, and October (that is, every three months, starting with the fourth month of the year). The same schedule is maintained in subsequent years, so the refresh is not scheduled to run in January (3 months after the October run).

Note

The cron expression currently evaluates against the specified time zone only. Altering the TIMEZONE parameter value for the account (or setting the value at the user or session level) does not change the time zone for the refresh.

The cron expression defines all valid run times for the refresh. Snowflake attempts to refresh listings based on this schedule; however, any valid run time is skipped if a previous run has not completed before the next valid run time starts.
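The skipping rule can be simulated to see which scheduled times actually start a refresh. This minimal sketch assumes, purely for illustration, that every refresh takes the same fixed duration and that a run finishing exactly at the next valid run time does not cause that run to be skipped; real refresh durations vary, and the function name is hypothetical.

```python
def executed_runs(run_times, duration):
    """Return the scheduled times (in minutes) that actually start a refresh.

    Simplified model: every refresh takes `duration` minutes, and a valid
    run time is skipped if the previous refresh is still running.
    """
    executed = []
    busy_until = None
    for t in sorted(run_times):
        if busy_until is not None and t < busy_until:
            continue  # previous refresh has not completed: skip this run time
        executed.append(t)
        busy_until = t + duration
    return executed


# A schedule that fires every 10 minutes, with refreshes that take 15 minutes.
print(executed_runs([0, 10, 20, 30, 40], 15))  # [0, 20, 40]
```

Under these assumptions, a refresh that regularly outlasts the interval effectively halves the refresh frequency rather than causing overlapping runs.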

When both a specific day of month and day of week are included in the cron expression, then the refresh is scheduled on days satisfying either the day of month or the day of week. For example, SCHEDULE = 'USING CRON 0 0 10-20 * TUE,THU UTC' schedules a refresh at midnight (00:00 UTC) on the tenth to twentieth day of any month and also on any Tuesday or Thursday outside of those dates.
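The “either field matches” rule can be checked against a concrete month. This minimal sketch models only the day-selection part of 'USING CRON 0 0 10-20 * TUE,THU UTC' (it ignores the time of day and time zone), using cron’s day-of-week numbering (0 = SUN). The helper names are hypothetical.

```python
import datetime

# Cron day-of-week numbering: 0 = SUN, 1 = MON, ..., 6 = SAT.
TUE, THU = 2, 4


def cron_weekday(d: datetime.date) -> int:
    """Convert Python's weekday() (MON = 0) to cron numbering (SUN = 0)."""
    return (d.weekday() + 1) % 7


def day_matches(d: datetime.date, days_of_month, days_of_week) -> bool:
    """When both day fields are restricted, a day matches if EITHER matches."""
    return d.day in days_of_month or cron_weekday(d) in days_of_week


dom = range(10, 21)  # day of month 10-20, inclusive
dow = {TUE, THU}
january = [datetime.date(2024, 1, day) for day in range(1, 32)]
print([d.day for d in january if day_matches(d, dom, dow)])
# [2, 4, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 23, 25, 30]
```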

- Default:

None

LOCK_TIMEOUT¶

- Type:

Session — Can be set for Account » User » Session

- Data Type:

Integer

- Description:

Number of seconds to wait while trying to lock a resource, before timing out and aborting the statement.

- Values:

0 to any integer (no limit). A value of 0 disables lock waiting (the statement must acquire the lock immediately or abort). If multiple resources need to be locked by the statement, the timeout applies separately to each lock attempt.

- Default:

43200 (12 hours)

See also HYBRID_TABLE_LOCK_TIMEOUT.
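Because the timeout applies separately to each lock attempt, a statement that locks several resources can wait longer in total than LOCK_TIMEOUT. This minimal sketch computes that upper bound under the assumption that lock attempts happen one after another, and also models how the value in effect is chosen from the Account » User » Session hierarchy (the most specific level that is set wins). Both helper names are hypothetical.

```python
DEFAULT_LOCK_TIMEOUT = 43200  # seconds (12 hours)


def effective_value(default, account=None, user=None, session=None):
    """Return the most specific value that is set (None means 'not set')."""
    for value in (session, user, account):
        if value is not None:
            return value
    return default


def worst_case_lock_wait(lock_timeout: int, resources: int) -> int:
    """Upper bound on total seconds spent waiting for locks.

    Assumes the locks are attempted sequentially; each attempt can wait up
    to lock_timeout seconds before the statement is aborted.
    """
    if lock_timeout < 0 or resources < 0:
        raise ValueError("lock_timeout and resources must be non-negative")
    return lock_timeout * resources


timeout = effective_value(DEFAULT_LOCK_TIMEOUT, account=3600, session=300)
print(timeout)                           # 300
print(worst_case_lock_wait(timeout, 3))  # 900
```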

LOG_LEVEL¶

- Type:

Session — Can be set for Account » User » Session

Object (for databases, schemas, stored procedures, UDFs, dynamic tables, Iceberg tables, tasks, services) — Can be set for:

Account » Database » Schema » Procedure

Account » Database » Schema » Function

Account » Database » Schema » Dynamic table

Account » Database » Schema » Iceberg table (externally managed)

Account » Database » Schema » Task

Account » Database » Schema » Service

- Data Type:

String (Constant)

- Description:

Specifies the severity level of messages that should be ingested and made available in the active event table. Messages at the specified level (and at more severe levels) are ingested. For more information about log levels, see Setting levels for logging, metrics, and tracing.

- Values:

TRACE, DEBUG, INFO, WARN, ERROR, FATAL, OFF

- Default:

OFF

- Additional Notes:

The following table lists the levels of messages ingested when you set the LOG_LEVEL parameter to a level.

| LOG_LEVEL Parameter Setting | Levels of Messages Ingested |
|---|---|
| TRACE | TRACE, DEBUG, INFO, WARN, ERROR, FATAL |
| DEBUG | DEBUG, INFO, WARN, ERROR, FATAL |
| INFO | INFO, WARN, ERROR, FATAL |
| WARN | WARN, ERROR, FATAL |
| ERROR | ERROR, FATAL |
| FATAL | ERROR (only for Java UDFs, Java UDTFs, and Java and Scala stored procedures; for more information, see Setting levels for logging, metrics, and tracing), FATAL |

If this parameter is set in both the session and the object (or schema, database, or account), the more verbose value is used. See How Snowflake determines the level in effect.
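The table and the “more verbose value wins” rule can be expressed as a small model. This minimal sketch is illustrative only: the helper names are hypothetical, it ignores the Java and Scala special case in which FATAL also ingests ERROR, and it treats OFF as the least verbose setting.

```python
# Severity order from least to most severe, as listed for LOG_LEVEL.
LEVELS = ["TRACE", "DEBUG", "INFO", "WARN", "ERROR", "FATAL"]


def ingested_levels(setting: str) -> list:
    """Levels of messages ingested for a LOG_LEVEL setting.

    Ignores the Java/Scala special case in which FATAL also ingests ERROR.
    """
    if setting == "OFF":
        return []
    return LEVELS[LEVELS.index(setting):]


def level_in_effect(session_level: str, object_level: str) -> str:
    """When both levels are set, the more verbose (less severe) one is used."""
    order = LEVELS + ["OFF"]  # OFF ingests nothing, so it is least verbose
    return min(session_level, object_level, key=order.index)


print(ingested_levels("WARN"))           # ['WARN', 'ERROR', 'FATAL']
print(level_in_effect("ERROR", "DEBUG"))  # DEBUG
```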

LOGIN_IDP_REDIRECT (view-only)¶

- Type:

Account

- Data type:

VARCHAR

- Description:

View-only parameter that contains a JSON object summarizing the values that have been set for the LOGIN_IDP_REDIRECT account property.

The JSON object contains a mapping between Snowflake interfaces and SAML security integrations. SAML security integrations are used to implement single sign-on (SSO) authentication. If an interface is mapped to a SAML security integration, then users who access the interface are redirected to the third-party identity provider (IdP) to authenticate; they never see the Snowflake login screen.

For more information about setting the LOGIN_IDP_REDIRECT account property, see ALTER ACCOUNT.

MAX_CONCURRENCY_LEVEL¶

- Type:

Object (for warehouses) — Can be set for Account » Warehouse

- Data Type:

Number

- Description:

Specifies the concurrency level for SQL statements (that is, queries and DML) executed by a warehouse. When the level is reached, the operation performed depends on whether the warehouse is a single-cluster or multi-cluster warehouse:

Single-cluster or multi-cluster (in Maximized mode): Statements are queued until already-allocated resources are freed or additional resources are provisioned, which can be accomplished by increasing the size of the warehouse.

Multi-cluster (in Auto-scale mode): Additional clusters are started.

MAX_CONCURRENCY_LEVEL can be used in conjunction with the STATEMENT_QUEUED_TIMEOUT_IN_SECONDS parameter to ensure a warehouse is never backlogged.

In general, it limits the number of statements that can be executed concurrently by a warehouse cluster, but there are exceptions. In the following cases, the actual number of statements executed concurrently by a warehouse might be more or less than the specified level:

Smaller, more basic statements: More statements might execute concurrently because small statements generally execute on a subset of the available compute resources in a warehouse, so each one counts as only a fraction toward the concurrency level.

Larger, more complex statements: Fewer statements might execute concurrently.

- Default:

8
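The behavior described above can be summarized as a decision for each incoming statement. This minimal sketch is a simplified model, not Snowflake’s scheduler: the statement “weight” (1.0 for a typical statement, less for a small one) is a hypothetical stand-in for how much of the concurrency level a statement consumes, and the class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Warehouse:
    max_concurrency_level: int = 8
    multi_cluster: bool = False
    mode: str = "MAXIMIZED"  # or "AUTO_SCALE"; only relevant for multi-cluster


def admit(wh: Warehouse, running_load: float, statement_weight: float) -> str:
    """Return 'run', 'queue', or 'start_cluster' for a new statement.

    running_load and statement_weight are hypothetical fractional counts:
    small statements may count as only a fraction toward the level.
    """
    if running_load + statement_weight <= wh.max_concurrency_level:
        return "run"
    if wh.multi_cluster and wh.mode == "AUTO_SCALE":
        return "start_cluster"  # Auto-scale mode starts another cluster
    return "queue"  # single-cluster or Maximized mode: wait for resources


print(admit(Warehouse(), 8, 1))                                      # queue
print(admit(Warehouse(multi_cluster=True, mode="AUTO_SCALE"), 8, 1))  # start_cluster
```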

Tip

This value is a default only and can be changed at any time:

Lowering the concurrency level for a warehouse can limit the number of concurrent queries running in a warehouse. When fewer queries are competing for the warehouse’s resources at a given time, a query can potentially be given more resources, which might result in faster query performance, particularly for large, complex, or multi-statement queries.

Raising the concurrency level for a warehouse might decrease the compute resources that are available for a statement; however, it does not always limit the total number of concurrent queries that can be executed by the warehouse, nor does it necessarily impact total warehouse performance, which depends on the nature of the queries being executed.

Note that, as described earlier, this parameter impacts multi-cluster warehouses (in Auto-scale mode) because Snowflake automatically starts a new cluster within the multi-cluster warehouse to avoid queuing. Thus, lowering the concurrency level for a multi-cluster warehouse (in Auto-scale mode) potentially increases the number of active clusters at any time.

Also, remember that Snowflake automatically allocates resources for each statement when it is submitted and the allocated amount is dictated by the individual requirements of the statement. Based on this, and through observations of user query patterns over time, we’ve selected a default that balances performance and resource usage.

As such, before changing the default, we recommend that you test the change by adjusting the parameter in small increments and observing the impact against a representative set of your queries.

MAX_DATA_EXTENSION_TIME_IN_DAYS¶

- Type:

Object (for databases, schemas, and tables) — Can be set for Account » Database » Schema » Table

- Data Type:

Integer

- Description:

Maximum number of days Snowflake can extend the data retention period for tables to prevent streams on the tables from becoming stale. By default, if the DATA_RETENTION_TIME_IN_DAYS setting for a source table is less than 14 days, and a stream has not been consumed, Snowflake temporarily extends this period to the stream’s offset, up to a maximum of 14 days, regardless of the Snowflake Edition for your account. The MAX_DATA_EXTENSION_TIME_IN_DAYS parameter enables you to limit this automatic extension period to control storage costs for data retention or for compliance reasons.

This parameter can be set at the account, database, schema, and table levels. Note that setting the parameter at the account, database, or schema level only affects tables for which the parameter has not already been explicitly set at a lower level (for example, at the table level by the table owner). A value of 0 effectively disables the automatic extension for the specified database, schema, or table. For more information about streams and staleness, see Introduction to streams.

- Values:

0 to 90 (90 days). A value of 0 disables the automatic extension of the data retention period. To increase the maximum value for tables in your account, contact Snowflake Support.

- Default:

14

Note

This parameter can cause data to be retained longer than the default data retention. Before increasing it, confirm that the new value fits your compliance requirements.

Table retention is not extended for streams on shared tables. If you share a table, ensure that you set the table retention time long enough for your data consumer to consume the stream. If a provider shares a table with, for example, 7 days’ retention and keeps the 14-day default extension, the stream will be stale after 14 days in the provider account and after 7 days in the consumer account.
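The provider/consumer example above can be reproduced with a small model. This minimal sketch assumes, as described above, that an unconsumed stream stays usable for the longer of the table’s retention period and MAX_DATA_EXTENSION_TIME_IN_DAYS, and that no extension applies to streams on shared tables in the consumer account. It also models the lookup in which the most specific level that sets the parameter wins. The helper names are hypothetical.

```python
def resolve(table=None, schema=None, database=None, account=None, default=14):
    """Return the most specific explicitly set value (None means 'not set')."""
    for value in (table, schema, database, account):
        if value is not None:
            return value
    return default


def days_until_stale(retention_days: int, max_extension_days: int,
                     shared_consumer: bool = False) -> int:
    """Approximate days an unconsumed stream stays usable (simplified model)."""
    if shared_consumer:
        return retention_days  # retention is not extended for shared tables
    return max(retention_days, max_extension_days)


max_ext = resolve()  # not set anywhere, so the default of 14 applies
print(days_until_stale(7, max_ext))                        # 14 (provider)
print(days_until_stale(7, max_ext, shared_consumer=True))  # 7 (consumer)
```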

METRIC_LEVEL¶

- Type:

Session — Can be set for Account » User » Session

Object (for databases, schemas, stored procedures, and UDFs) — Can be set for Account » Database » Schema » Procedure and Account » Database » Schema » Function

- Data Type:

String (Constant)

- Description:

Controls how metrics data is ingested into the event table. For more information about metric levels, see Setting levels for logging, metrics, and tracing.

- Values:

ALL: All metrics data will be recorded in the event table.

NONE: No metrics data will be recorded in the event table.

- Default:

NONE

MULTI_STATEMENT_COUNT¶

- Type: